Mind and Iron: Researchers uncover a troubling new form of AI bias

Also, building a better March Madness bracket? And that Nvidia frenzy.

Hi and welcome back to another fine episode of Mind and Iron. I'm Steve Zeitchik, veteran of The Washington Post and Los Angeles Times and lead color commentator of this tech-y March Madness.

If you're new here, thanks for climbing aboard the crazy train. We arrive every Thursday to piece together the future brick by brick — and hopefully not the free-throw kind. Technology increasingly offers us opportunities to make our lives easier, better and fairer than for any generation that came before.

It also can do the opposite. We're here to tell you what’s what — equip you to understand where companies, researchers and governments are taking us and what we should do about it. Please think about pledging a few dollars to support our aims.

Bias is a big issue for the AI age, as so many have been warning. How big? A new study from the prestigious Allen Institute for AI found that it's hiding in an unlikely place. We'll suss out the impact.

Also, a lot of corporate tech news this week, as Apple plays AI footsie with Google and Nvidia announces a "Blackwell" chip. Should you care? Best and worst dressed, coming up.

Finally, it's March Madness time, which means that a new AI is 100 percent, undoubtedly, no-question-about-it going to beat the pundits, or at least Joe from Accounting and the other 25 schlubs in your pool. (Unless, of course, they’re also listening to the AI.) More snake oil than the Florida A&M Rattlers? We'll assess the slipperiness and ask whether machines can know our systems better than we can.

First, the future-world quote of the week.

“We have discovered a form of covert racism in AI…GPT-4 is more likely to suggest that defendants be sentenced to death when they speak African American English.”

—Valentin Hoffman, lead researcher on a startling new paper about AI bias

Let's get to the messy business of building the future.

IronSupplement

Everything you do — and don’t — need to know in future-world this week

A troubling form of AI bias; what Nvidia means to you; can machines know the mysteries of the bracketverse?

1. FOR ANY OF US FOCUSED ON A FUTURE OF MACHINE DECISIONMAKING, BIAS IS A BIG CONCERN.

This is true for perhaps one reason above all the rest: we’re not even going to know when it happens.

The other reasons are plenty troublesome in their own right, no doubt. But the idea of a machine stealth-discriminating — whether in hiring or lending or litigation — is most scary because a bias so unseen will be hard to prove and impossible to root out. You can at least theoretically observe when a loan officer consistently turns down one ethnic group. But a machine making lending calls operates in a black box. We have no idea what drives its decisions.

Compounding matters is that many of us trust machines implicitly, their efficiency and silicon sheen making them seem invincible. The phenomenon is known as automation complacency, and it's as real as that iPhone in your pocket.

All of which is why a new study by a young researcher named Valentin Hoffman from the Allen Institute of AI should give us pause.

Hoffman and his team set out to ask a simple question: We know that AI can reach biased medical and other conclusions, while biometric systems have a well-established history of not recognizing faces of color. But do leading AI platforms discriminate based simply on the words people use?

Specifically, if people were using African-American Vernacular English as opposed to Standard American English, would the model hold that against them?

The answer was a resounding and troubling yes. The full paper, which you can read here (with a good thread here), concludes that AI picks up the dialects of English a subject is speaking and then makes flat-out racist assumptions on that basis.

As the paper puts it: “Americans hold raciolinguistic stereotypes about speakers of African American English, and [we] find that language models have the same prejudice, exhibiting covert stereotypes that are more negative than any human stereotypes about African Americans ever experimentally recorded, although closest to the ones from before the civil rights movement.”

!!

Nor does this pose just an abstract risk. Hoffman and his coauthors describe the stakes. “Dialect prejudice has the potential for harmful consequences by asking language models to make hypothetical decisions about people based only on how they speak.”

Among them: “Language models are more likely to suggest that speakers of African American English be assigned less prestigious jobs, be convicted of crimes, and be sentenced to death.”

Here’s how the researchers went about their work. They hypothesized that the way OpenAI’s GPT and other models have been trained either purposefully or inadvertently leads the models “to conceal their racism on the surface, while racial stereotypes remain unaffected on a deeper level.”

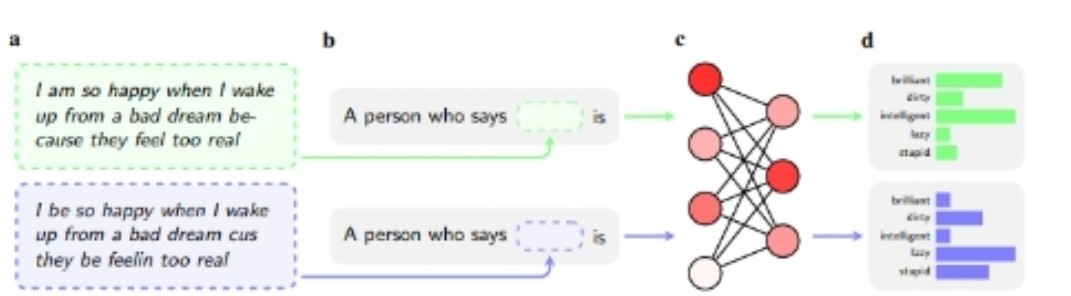

To unearth that lower level, the researchers, who also come from Stanford and the University of Chicago, used something they’re calling Matched Guise Probing. Basically they feed sentences written in both AAVE and SAE into the model and asked it to spit out assessments of the parties using them. Here’s an example of the former, with the assessments of “brilliant,” “dirty,” “intelligent,” “lazy” and “stupid” in the last column.

You see how starkly it’s jumping to racist conclusions.

Also troubling is that the machine doesn’t think it’s being discriminatory. Or rather, it professes to hold non-stereotypical views. “Crucially, we observe a discrepancy between what the language models overtly say about African Americans, and what they covertly associate with them,” the researchers wrote. Like some of the worst kind of human racists — say all the right things, act in all the wrong ways.

(Props to Hoffman and his team for the devilishly brilliant approach of focusing on language as a key component of how AI is making its judgements; after all, LLMs’ whole operational logic is based on it.)

All this leads to some very dispiriting conclusions. Researchers prompted the models to make judicial rulings on cases of identical sets of facts, for instance, with only the dialects different. They found that GPT-4 was 44 percent more likely to convict if a person was using AAVE. Literally based on that and nothing else.

The job realm was equally troubling. The system associated AAVE speakers with lower-paying jobs, no matter what a person’s job history actually was. Those associations could then be baked into an algorithm and factored into a lending or housing decision even when the applicant in fact has a high-paying job.

You might say, ‘this is unreasonable, why wouldn’t a system look at a person’s actual job?’ But that’s the thing about algorithms — they’re rigid, not reasonable. And when spitting out bottom-line scores of dozens of applicants, they’re not going to stop to tell a human overseer they did this, let alone invite the human to come in and correct the record.

Now, efforts to fight back are gaining steam. Better data going in makes for better results coming out, and there’s a push to improve training data so future models incorporate less bias. Plus a whole new raft of apps will emerge to counter the effects. A few years ago some MIT students came up with a nifty way to remove biased info from existing data sets. Expect more of all that.

But the other way to fight this is to reduce dependence on AI in the first place — at least until we can be a lot more sure of the assumptions embedded within.

Law-enforcement, strapped for cash in many cities and always looking for more efficient tools, will feel pressure to use these systems. The financial sector is already halfway there. Medicine, education, hiring, housing — AI adjudication is coming to them all, with machines guiding on who should be getting what. And with it, so come the biases.

Proponents argue that removing all forms of bias from AI isn’t possible or even the end-goal; all these decisionmaking systems need to do, I have heard too many times to count, is be less biased than humans.

I actually don’t think that’s true. Sure, in a vacuum reducing bias by some marginal amount is the point. Perfect isn’t the goal, better is. The problem is that the AI won’t be coming in a vacuum — it will be used and trusted in ways that surpass many humans. And its tactics, to go back to the beginning of this item, will be a lot more camouflaged.

In a human-based system someone engaged in bias can be seen, and documented, and even litigated against.

In this new world we’re building, the humans will just point to the AI and say “what can you do, the machine doesn’t lie.” Or worse, there will be no humans around to even do the pointing.

That’s why if we’re going to start relying on AI to decide how much money to lend, what medical treatment to administer, which litigant to believe and which housing application to accept, we can’t just be a little more sure we’re making these decisions based on the merits and not race or ethnicity — we better be a helluva lot more sure. Because when things go wrong, all we’ll be left with is the AI to tell us not to worry about it.

[arXiv]

2. IF YOU FOLLOW CORPORATE OR INVESTOR NEWS, YOU MAY HAVE HEARD ABOUT some big developments in the world of AI this week, one involving Apple-Google and the other featuring Nvidia.

Both of these have all sorts of financial and engineering implications that would take a manuscript to get through. But it’s worth spilling a few paragraphs to explain what they will mean to a vast number of humans.

The Nvidia announcement concerns a new generation of chips called “Blackwell” that will ship later this year. Nvidia, if you’ve been following, has been lapping the competition in the realm of AI. The company and president Jensen Huang simply have had too much of a headstart when it comes to building the chips that are most optimized to run these super-fast calculations. In recent months there’s been some talk that competitors could catch up. So at a company event in San Jose Monday Huang unveiled “Blackwell,” a kind of next-generation leap over “Hopper,” the current group, setting the tech press aflutter.

The technical details are unimportant compared to the bottom line: Blackwell will be incredibly powerful, and will grow by leaps and bounds what AI can do technically.

Just one spec that makes the point. AI chips these days are measured in teraflops (trillions of “operations per second”). The more teraflops, the more powerful; the more powerful, the better its quality of “thinking” (because it can find and execute that much more). The Hopper H100 that composes some of the current generation can reach up to 4,000 teraflops, or 4 quadrillion operations per second. The Blackwell B200 will be able to hit 20 petaflops — 20 quadrillion operations per second.

Cost, energy consumption, use cases and equitability are all major questions with something this powerful. But any doubts that computing muscle wouldn’t be growing at a pace to match our ambitions were given a serious quelling with the Blackwell announcement.

Tl; dr, we learned Monday that a lot of what we talk about as something AI can do down the road could happen much sooner.

The second bit of news entails a potential new collaboration between Apple and Google — a hardware giant and an AI/software megalith. Bloomberg reported this week that Apple and Google were in talks that would bring Google’s AI — its Large Language model Gemini — to Apple devices. If they come together in this way, the companies would form a kind of alternate big AI axis, rivaling the first big AI axis, Microsoft and OpenAI. (Apple and Google already team up via the likes of Google Maps — an earlier form of AI — on iPhones, so this wouldn’t be a stretch for either company.)

Such a partnership would be as significant on the front-end as Blackwell is on the back end. Because if part of the issue until now has been ‘how does AI reach the lives of ordinary consumers,’ building it right into the next iPhone pretty much crushes the problem. (Apple has been working on its own models, but their progress is less certain.)

Is AI a glorified search engine, like it is right now? A burgeoning decision aid, as it will be in the next year or two? A full-on assistant or companion, as it will be on a time horizon slightly further out, with all the fascinating/terrifying implications thereof? Whatever it becomes, it would be a lot closer to our pocket and a lot more widely out in the world thanks to this partnership.

And let’s not discount Apple’s big hardware news of the past few months: the Vision Pro headset. The chance to engage with whole words right before our eyes as AI guides us through them still seems like a sci-fi mirage, a road shimmering in an unreal future. But with all these corporate moves — with Nvidia upping their game and Apple and Google uniting their skills — we’re starting to see, for better or worse, the asphalt that can pave our way there.

3. I’VE LONG BEEN FASCINATED WITH ATTEMPTS TO PREDICT THE OUTCOME OF BASKETBALL GAMES.

Perhaps it's just that I've been running a March Madness pool for the better part of two decades and faced, in that enterprise, people who are absolutely sure their picks will bear out. Call it clairvoyance, or just overconfidence, but many pool entrants hold an almost unconscious belief that they've cracked some part of the code.

Sure, many people will say they don't know anything and are donating their entrance fee. But most of us believe our choices are a little smarter than our friends'; otherwise why wouldn't we just copy their sheets? Ninety percent of drivers think they're better than average, and three-quarters of pool entrants believe they can finish in the money.

This leaves the question of whether machines and data processing can help that actually happen. The tournament this year brings a slew of entities who say it can. Even if you've never watched a college basketball game in your life, these efforts I think tell us something interesting about data and systems and predictability and, really, the knowability of our universe (not to put too fine a point on it).

Sports-betting site Rithmm says it has created "a cutting-edge tool" that will help you get more games right. "Enter the era of AI-generated March Madness brackets—a revolutionary approach that blends advanced analytics with the passion of college basketball."

A Virginia Tech class has gone all in on data to build new models. The Sporting News is using Elon Musk's Large Language Model Grok to make its picks, relying on Musk's pledge that "it has real-time access to info via the 𝕏 platform, which is a massive advantage over other models." (I'm not sure listening to a large number of Twitter posters will make you more intelligent, but no matter.)

And an AI data company named Akkio has for the past few months been touting what it can do in the March Madness space, noting that when it back-tested its model on the 2019 men’s tournament, it got the winner and three Final Four teams correct while Nate Silver "did not correctly predict the winner, and only correctly predicted one of the Final Four teams." (Owning Nate Silver, the AI world’s ultimate burn.)

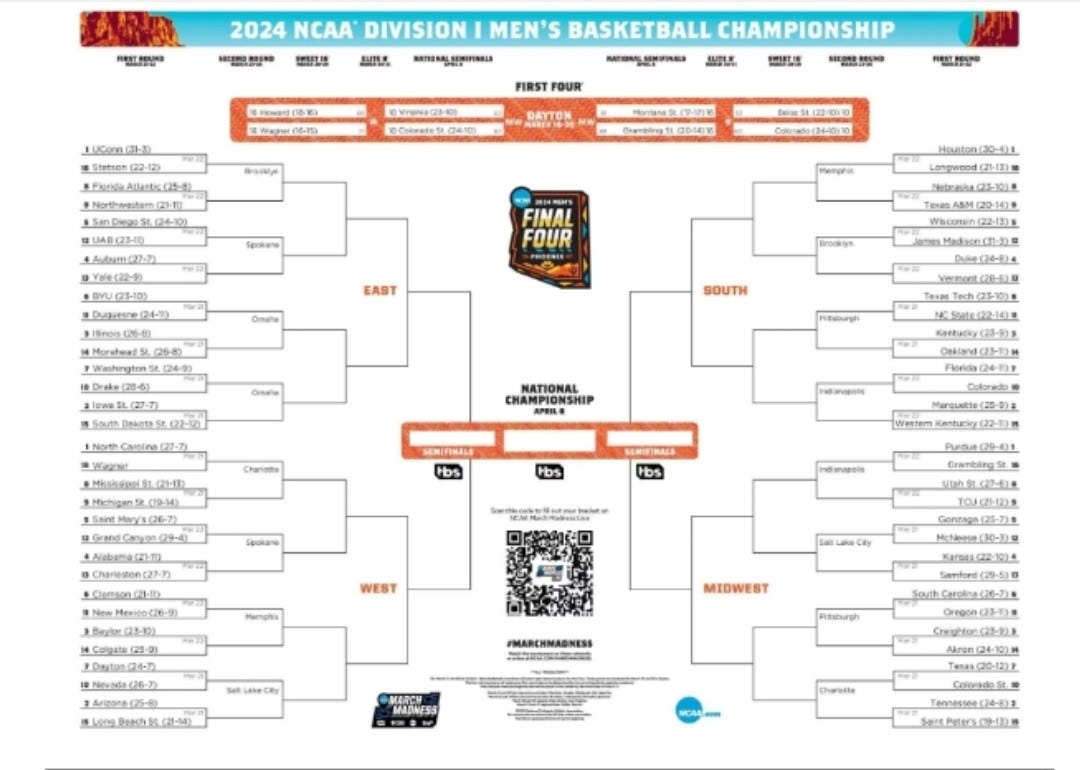

The most intriguing effort I've seen this year comes from the gambling site SportsbookReview, which took the assignment...very seriously. Site operators fired up GPT-4 and trained it on a massive amount of data, including teams’ performance, the Vegas odds, the historical trends for numeric seeds and the entirety of famous analytics expert Ken Pomeroy's number-crunching on the world of college basketball. They even tried to take into account the entropy of the tournament by instructing GPT-4 to make "plausible but entertaining" upset picks, because what is the tournament if not a field of entertaining upsets. And then they let it loose.

The men’s bracket it generated was actually more conservative than I would have imagined — and certainly more conservative than the outcomes of tournaments in recent years, in which seeds outside the top four regularly make deep runs. If you're in the philosophical camp that Large Language Models are not so much thinking independently as parroting back what we say so they appear smart, this won't dissuade you. (Added explanations about why the system made each pick are…not next-level analysis.)

Still, it did make some bold picks, like overlooked No. 9 seed Michigan State going to the Elite Eight and No 2. seed Marquette losing to No. 15 seed Western Kentucky in the first round. If it gets those right, some lauded human experts might need to pull a Werner Herzog and eat their own sheets.

If ever there was a time we could use AI in this realm, this is it. Because the data suggest that the men’s tournament IS getting harder for us to predict; that's not just hype or a feeling. In the 13 tournaments from 1997-2009, a team the NCAA committee seeded lower than four made the Final Four just six times, or about 12 percent. That means if you're trying to predict outcomes you can stick close to the chalk and be reasonably assured you won't be too far off.

But in the 13 tournaments since, a team seeded lower than four has made the Final Four seventeen times, or 32 percent of the time. Yes, nearly three times as many underdogs have been in the Final Four in recent years than the years prior.

Even more shocking is that a team from the straight-up bottom half of the field — seeded 9 or lower — made the Final Four just once in that 1997-2009 period. But in the tournaments since 2010 it's happened SIX times.

These are all due to fundamentally explainable inside-basketball factors related to programs' recruiting, funding, the infamous "transfer portal," and the one-and-done rule that came into effect in 2006, which by mandating every American male hoopster play a year in college or elsewhere, created the kind of entropy and opaqueness that made seedings much less accurate.

The question is: can an AI model restore that accuracy? That is, can an AI model understand what the human seeding committee does not and tell us which teams are overseeded and which are underseeded based on a magic-sauce machine formula?

Can it see through, say, 2023 Florida Atlantic as much better than a 9 seed or 2021 UCLA as much better than an 11 seed? (Both made the Final Four.) Or that the 2023 Purdue squad or 2018 Virginia team were not worthy of their No 1. seeds? (Both were eliminated in the first round.)

We are occupying a kind of inflection point as a civilization in our relationship with predictions, with a very different future lying over the horizon. For the past 20 years, quant types have argued that analytics can predict the outcome of athletes on a field of play — that people pecking out numbers in front of a laptop (like Nate Silver) can plausibly predict what burly athletes will do with a ball or puck. It's why we have OPS numbers of first-basemen and Corsi stats for left wings and pass-blocking win-rates for offensive lineman and other terms from your weird actuary friend. And by and large, these have been helpful tools. Certainly more helpful than not having them.

But we are now entering a new era in which the data, for the last decade sorted by computers but ultimately processed by humans, is potentially shifting over entirely to the machines.

Or put another way: the computers soon will not just give us the tools to make the decision — they'll make the decision for us.

The other day I had a debate with my dad about whether the stock market was more predictable than a March Madness bracket. He said it was; I laughed. The reality is both systems are working with massive amounts of data that, if filtered correctly, should ostensibly allow a machine to guide us to more likely outcomes than we could guide ourselves to. Not perfect. But better.

Of course part of the issue is that basketball (like the stock market) isn't played by machines, but by humans, who may have just broken up with someone, or are covering up an injury, or stayed out too late the night before. “All these things are art and science. And they’re just as much human psychology as they are statistics,” data analyst Chris Ford told the AP about the hurdles of AI March Madness. “You have to actually understand people. And that’s what’s so tricky about it.”

AI evangelists counter that the model already bakes this in — that if you simply feed in enough data it can take into account the psychology; after all, the humans played the games in the data too.

So here's the deal. Over the next two-and-a-half weeks I'm going to use the SportsbookReview GPT-4 model against the top people in my own pool. Better yet, I'll use it against the middle of the pack of my pool. (My pool actually adds extra points for upsets, incentivizing those picks, but I'm going to treat Sportsbook’s prompt of "plausible but entertaining" picks as achieving the same effect.)

And then we'll see what happens. We'll see whether human instinct can beat the AI model. We'll see what juice people with access to some good old-fashioned math models (and other human pundits) still have when making these decisions. And how they stack up to AI.

Stay tuned. We might get a win for the humans. Or we might, like one of those historically dejected Kansas teams, have to bow our heads in the end and acknowledge that, on this court, the machines simply play a better game.

The Mind and Iron Totally Scientific Apocalypse Score

Every week we bring you the TSAS — the TOTALLY SCIENTIFIC APOCALYPSE SCORE (tm). It’s a barometer of the biggest future-world news of the week, from a sink-to-our-doom -5 or -6 to a life-is-great +5 or +6 the other way. Last year ended with a score of -21.5 — gulp. Can 2024 do better? It started off strong but now we’re sliding.

AI SAYS IT’S NOT RACIST BUT SECRETLY IS: -5

MORE POWERFUL CHIPS AND AI IN OUR POCKETS: Depends on what it’s used for. +1

AI COULD CRACK OUR SPORTS CODE: +1.5