Mind and Iron: Synthetic videos may soon kill us all

Also, Peter Thiel keeps trying to take our information. And a real-world Jurassic Park reboot?

Hi and welcome to Mind and Iron, the tech-media hotspot where the humans are in charge. I'm Steve Zeitchik, veteran newspaper reporter and occasional human cultural-decoder ring.

Tech moves pretty fast. If you don't stop and look around once in a while, you could miss the fact that your friend is now an AI. So we’re here to help you out.

If you’re a regular subscriber to our Thursday missives, it’s great to have you back. If you’re a visitor/email-forwardee, feel free to become a regular. Join and behold all the decipherments of our fast-changing tech world — free! all free! (for the moment) — so sign up and keep your soul nourished.

And if you like what you see and don't want to miss anything when the paywall drops, just hit this pledge button. No commitment now, just a future allocation of a few yen to keep these rolling in (and to offer a little support for what I’m doing).

First, our future-world quote of the week:

“One can halt life and then start it from the beginning.”

—Researcher Teymuras Kurzchalia, after his team discovered that they could revive worms that had been frozen for 46,000 years

It may be August, but future-world still pedals furiously. In this week’s installment, medical chatbots reveal their true danger; that magical roundworm resurrects itself; and the stock price of Peter Thiel’s scary DoD-contracted software company keeps rising, because what is capitalism if not the chance to make money off a secretive tech firm digging into our personal data on behalf of the U.S. government?

Also, after talking to the so-called “digital twin” of the K-pop star Mark Tuan a few weeks ago, the real one got back to us and told us what he thought of his clone.

At least we think he’s the real one.

Let’s get to the messy business of building the future!

IronSupplement

Everything you do — and don’t — need to know in future-world this week

Peter Thiel wants your data; medical chatbots go on the juice; Jurassic Park worms a little closer

1. THE KIND OF PEOPLE WHO WATCH TECH STOCK PRICES like it’s the line on Patrick Mahomes’ 2023 MVP odds went a little gaga last week when the new-era software firm Palantir saw its stock price rise more than 10 percent on Friday alone.

A prominent analyst called the company “The Messi of AI” (nice phrase, what’s it mean), prompting investors to scurry to their E*Trade app and the financial self-help press to fall over itself. (The Motley Fool: Palantir is “uniquely positioned to benefit from the accelerating demand for AI.”) Palantir’s stock price has now nearly tripled since the start of the year.

The Peter Thiel-backed company, which went public a few years ago, says its main objective is to, you know, just help us out: “Palantir empowers intelligence agencies to securely derive actionable insights from sensitive data and achieve their most challenging operational objectives.”

Good turn of bureaucrat-ese. Here’s what the firm really does — mine data to try to unearth criminals from the vast soil on which many of us live our lives.

When it works, it can be ethically questionable, with Palantir’s algorithms used for such harmless tasks as separating families at the border. (The company contracts with groups like ICE, that bastion of scrupulous ethics. “Not only do the tech companies provide access to the sensitive personal information used to destroy communities, they undermine the rights” of everyday people, the ACLU has warned.) Across the pond, Palantir has stirred the pot by entering data-sharing agreements with the NHS, prompting both sides to backpedal.

And that’s when it works. A 2021 Intercept investigation into the LAPD’s use of Palantir’s software documented multiple holes in the system.

“Up close, the software was only as good as the people maintaining and using it,” the site found. “To make sense of Palantir Gotham’s data, police often need input from engineers, some of whom are provided by Palantir … At various points in the search, he made assumptions that could easily throw off the result.” (The ACLU also convincingly argues it doesn't stop terrorism.)

And all apologies to Wall Street analysts, but it's highly unclear how the new wave of generative AI will vault Palantir over these hurdles, beyond the general burble that AI will just kind of make computers, you know, smarter and everything. If chief executive Alex Karp knows, he's not handing out specifics. (He’s mainly said things like Palantir’s AI is “a weapon that will allow you to win, that will scare your competitors and adversaries.”)

Look, it’s nice to imagine a world in which all bad guys are rooted out by large-language models. The reality is often more complicated, sticky and algorithm-resistant. And even when Palantir does help law enforcement, that doesn’t mean it’s worth the cost of an entire society’s personal information plugged into a giant searchable database for a midlevel government employee to access at the flick of a finger.

This hasn’t stopped the company from waving the flag on all it has supposedly achieved. “With Palantir, investigators are uncovering human trafficking rings, finding exploited children, and unraveling complex financial crimes,” it boasts.

And it won’t stop government agencies from buying what Palantir is selling — literally. The company is currently in the middle of an $820 million Department of Defense contract and just a few months ago landed a $100 million pact with the State Department. Oh yeah, it’s funded in part by the CIA's VC arm.

Fearful that criminals will be using AI, or simply wanting to seem high-tech themselves, more law-enforcement and intelligence agencies will be contracting the services of data miners like Palantir in the years ahead. This means a lot more poking into our information, mining the movements and actions of innocent people, and sharing that data with government agencies that have a questionable legal right to it.

In a true irony, Karp wrote an op-ed in the NY Times last week warning of “our Oppenheimer Moment: The Creation of A.I. Weapons,” which is a little like The Riddler running around town telling everyone what a threat The Joker is. In reality AI weapons and data-mining gone amok are both threats, and maybe we should let the people who aren’t involved with either of them be in charge of their safety.

[The Intercept, Fortune and ProPublica]

2. IN A TIME OF DIVISIVENESS, AT LEAST WE HAVE MARTIN SHKRELI TO UNITE US. The paroled “Pharma Bro,” who has been trying like mad to get traction for his medical chatbot (“Dr. Gupta” — you don't want to know, nor do you need to) resurfaced Wednesday when he got into a Twitter war with Gary Marcus, an actual AI expert and professor.

Shkreli, who has been touting Dr. Gupta for several months to little effect, first called out another AI expert, the Montreal-based Sasha Luccioni. She had reasonably noted that large-language models “shouldn't be used to give medical advice. Who will be held accountable when things inevitably go sideways?”

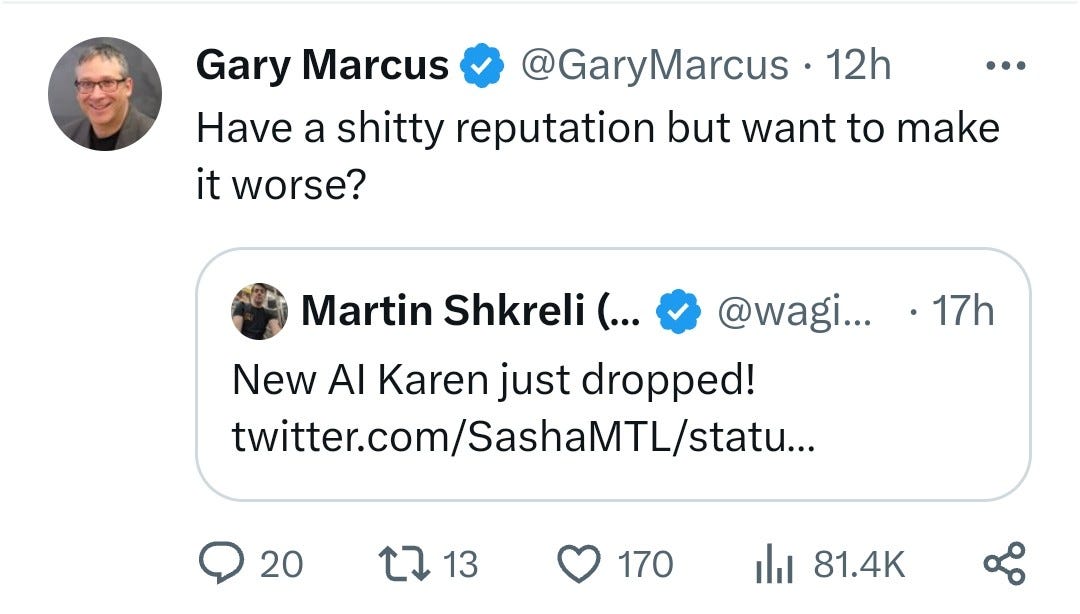

Shkreli unreasonably called her an “AI Karen,” prompting Marcus to reasonably say the below.

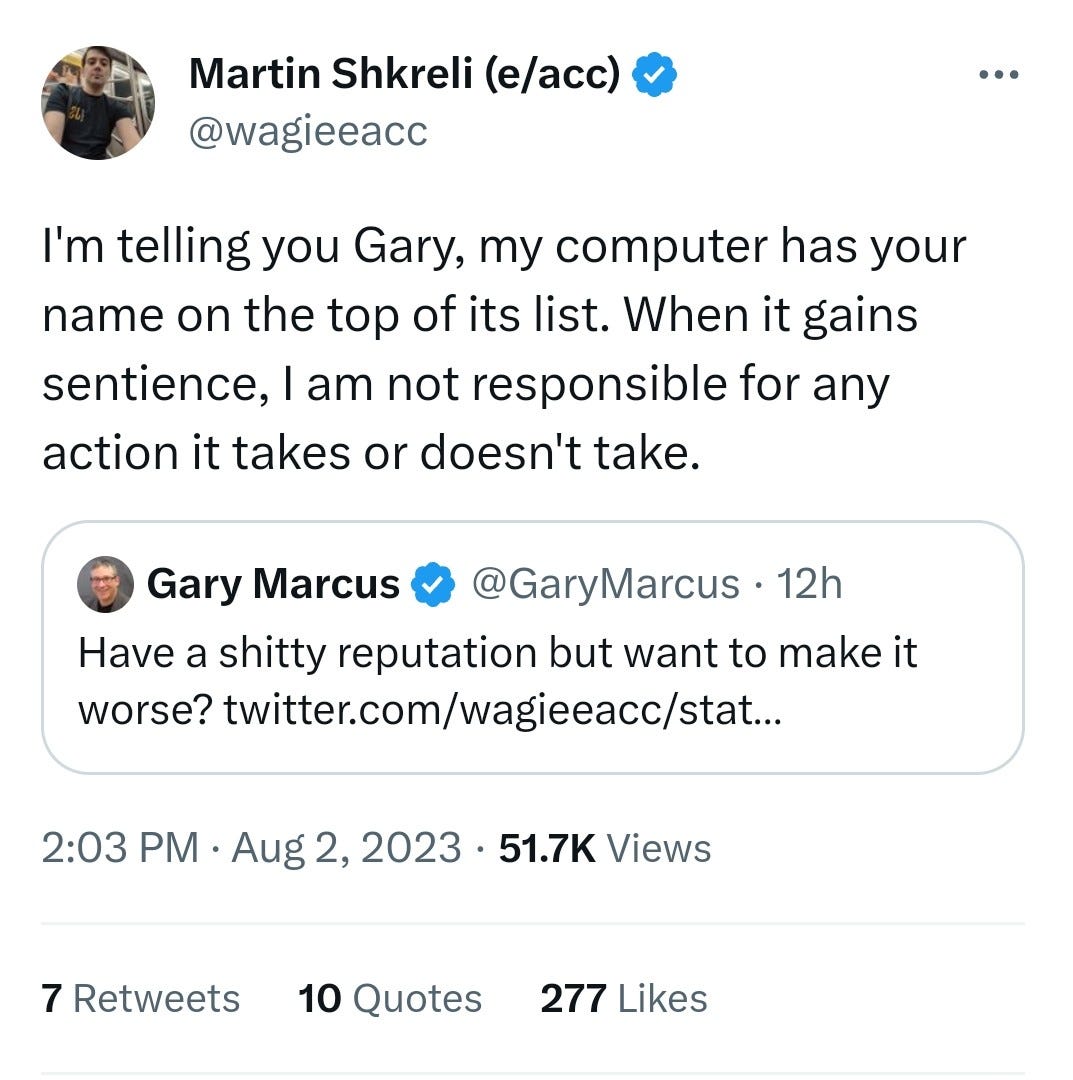

Then Shkreli responded with a threat-but-it's-a-joke-but-it's-a-threat.

What Shkreli is doing with Dr. Gupta isn’t noteworthy — I've played with the tool and it basically just sends you generic symptom information with a few very specific articles that are unlikely to be relevant to your condition. You're better off Googling, and that's saying something.

(In a message to me Marcus noted that “large-language models absorb a ton of information from the Web and probably from things like purchased medical licensing exams. That means they do well on licensing exams; it doesn’t mean they are good doctors.”)

But I think what lies at the core of Shkreli’s trolling is in fact something to be taken seriously. His comments hint at how AI can and will be used against doctors — willful ignorance dressed up as patient empowerment. Right now Google is more or less a neutral force when it comes to armchair diagnoses — it certainly leads some people astray, but it also makes sure some questions are asked that maybe a harried clinician skips over. Fine enough.

But once these chatbots start getting powerful enough to sound human, and thus authoritative, I think the game changes. For one thing, some people are really led astray (we’re talking disastrously-take-the-wrong-meds astray).

Even more fundamentally, medical diagnoses get weaponized. Individuals who don't like a diagnosis, or societies that don't like a health policy, can invoke (or manipulate) AI to support a claim. And since AI will actually be more knowledgeable than humans in many realms, people will naturally assume the machines are always right in medical instances, even in cases when they are definitively wrong.

You'd think everyone who’s concerned about their health would essentially privilege doctors over whatever devil's-advocacy is being dredged up online, but then if you thought that you didn’t live through the pandemic.

TL;DR: a lot of what awaits us is more Covid-style misinformation, only now aided and abetted by a manipulable and authoritative-sounding computer system. Fun times.

Hey, AI is going to majorly transform medicine in areas like research and even diagnostics. When handled (and it's a big “when handled”) by people who know what they're doing. Or have proved out their use-case via studies. But this Dr. Google on steroids is a nightmare.

Anyway if you want to read something Marcus wrote that is especially interesting (and blissfully doesn't involve Martin Shkreli), check out this new paper he co-authored on the communication limits of current large-language models. It’s fascinating — and actually deals with what AI can do, as opposed to what people want it to do.

3. AND YOU THOUGHT YOU WERE OVER THE JURASSIC PARK REBOOTS.

Back in 2018 scientists in Russia thawed out roundworms that had become frozen in the Siberian permafrost. Pull them out, put them in water, and bam — they’re back and wriggling again like the sea monkeys you almost ordered from the back of that comic book (that’s one for our Gen X and Boomer readers).

The worms lived out their normal lifespan (a few days), got around to reproducing (several generations) and somehow this unassuming creature that was around when woolly mammoths roamed the earth 46,000 years ago was back at it without missing a beat. This was all summed up and revealed in an article published in the journal PLOS Genetics late last week.

There’s actually a debate over whether the creatures were really that old — a Brigham Young biologist named Byron Adams told Scientific American he wondered why the worms themselves were not carbon-dated, only the nearby flora. Even so, much of the science world has been marveling at how this happened. It’s part of a mysterious and little-understood process called cryptobiosis. That’s when organisms under stress in extreme conditions basically manage to pause life indefinitely so they can survive.

There’s something almost fairy tale-like about creatures long thought dead simply springing up again as if nothing had happened (one of the authors invoked Sleeping Beauty).

But the implications — for everything from a planet facing extinctive threats to Gwyneth Paltrow-ian quests for immortality — are mad significant. The findings suggest a stoppage of life’s deteriorations that, Oscar Wilde novels aside, contradicts everything we feel and know about aging. As that researcher above said, “One can halt life and then start it from the beginning.”

Also weighty is the idea (and this part equally blows my mind) that creatures long thought extinct could just up and return because, unbeknownst to us, they’ve been hiding in the back of the freezer all along.

“Our findings are essential because … the long-term survival of a species’ individuals can result in the re-emergence of lineages that could otherwise have gone extinct,” said Philipp Schiffer, one of the study’s authors.

A lot of debate has attended the question of whether creatures like the woolly mammoth should be revived in the lab (for biodiversity, not an amusement park visited by Jeff Goldblum). But this week’s roundworm news raises another tantalizing possibility: As a climate-changing Earth grapples with frighteningly accelerating extinctions, species we’ve long thought gone may be here and just waiting for a good defrosting.

[Scientific American, CNN and PLOS Genetics]

MindF#ck

Notes from the Future’s Edge

Revisiting Mark Tuan (the real one)

So a few weeks ago Mind and Iron talked to the newly launched “digital twin” of the K-pop star Mark Tuan, the most elaborate, GPT-driven alter ego of a celebrity yet devised. As you can read here, it was an enlivening conversation, one in which the young musician didn’t just tell us how much he loves his fans, that old Bieberian chestnut, but talked about his travels, his experiences with racism, his feelings on AI.

(“It can be a good thing, with new and interesting work opportunities,” he said on the last of those. “But while it can be beneficial it can be used to create content that’s not accurate or true.”)

At the time, I’d sent the actual Mark Tuan some questions about how he felt that a machine-generated twin was out there and running around looking like him and talking like him — how he felt about what he’d rather daringly created, referred to in some tech circles as synthetic media.

He took a minute to get back to me. This past weekend he did. It was a telling experience.

Here’s a sample of what he said.

Mind and Iron: This feels very cool and pioneering. What prompted you to want to become one of the first full-fledged celebrity digital twins?

Mark Tuan: I’m really interested in technology and I’m always trying to find more ways to connect with my fans. What was really interesting to me about a Digital Twin, and specifically working with [tech partner] Soul Machines, is that the technology provides my fans with a one-on-one, real-time connection. While I’m sure my fans will always prefer to talk to me in person or via Zoom, that’s not possible all the time. I think my Digital Twin is a good alternative for my fans that lets them talk to digital me whenever they want.

M&I: I was impressed with how authentic-seeming it was, especially the responses. Was that something you actively sought, as opposed to a more limited script-based approach?

MT: I wouldn’t want to offer an experience to my fans if I didn’t believe that it would ultimately allow them to feel closer to me. It was important to me to ensure the experience was as authentic as possible to who I am.

M&I: Do you see this as the future of fandom, everybody having private conversations with their idols? And how does that make you feel, this idea that so many people can feel like they're talking to you without you even knowing they're talking to you?

MT: We’re just at the beginning of this technology so we don’t know exactly where it’s going to go. I like the idea of my fans being able to enjoy themselves or feel closer to me by talking to my Digital Twin.

One thing that struck me is how … not different the responses of the real-world Tuan were from the responses of Tuan Twin.

Now, that may be more a function of how guarded the man was than how supple his AI is. But this is a distinction without a difference. Because if the question is whether public personalities can effectively be mimicked by machines in real-world interactions, the Tuan Twin answer is a decided yes.

Where that leaves us as a society that makes hard-core distinctions — that needs to make hard-core distinctions — between what the human said and what the machine said is in an uncertain place indeed. To put it mildly. This kind of tech will completely conflate and confuse in the collective mind what public figures — from the goofiest celebrity to the most influential politician — actually said with what a twin can be induced to say.

“The pieces are coming together to create an end-to-end version of a celebrity, news-anchor, or politician,” Hany Farid, a UC Berkeley professor who has been researching and warning about the threats these synthetic videos pose, said to me in an email. He noted that researchers like him are diving in to “understand where the tech is and how to defend against it.”

There is undoubtedly something nifty, even culturally transforming, about the idea of conversing in real-time with someone famous, and my mind goes to thrilling places about our relationship with our heroes and our children growing up in a world in which they can casually talk to their most beloved idols like they’re chatting with the neighbor.

But there is also something abjectly terrifying about a video in which a public figure is on our phone convincingly saying something they never said and don’t even know is being said on their behalf. Whether this is weaponized by misinformation-sowing bad actors or just incidentally creating tons of reality-warping confusion, it’s a cataclysm waiting to happen.

I asked the real Tuan a variation on this whopper and he left it unanswered (though spoke volumes with his silence). “Are you concerned about people mistaking this for actually talking to you, or even citing your responses as if it is you?” I said to him.

He replied: “I think it is important that fans know that this is just for fun and isn’t the real me. That’s why we made it clear that this is just a digital version of me and that not everything he says is true. I have seen fans post videos of Digital Mark saying false information like I’m going on tour to a specific country but Soul Machines has put guardrails in the background preventing Digital Mark from saying anything too out there. We’re just at the beginning of this technology and process so it’s a learning experience that will only get better as we go.”

So, yes, he is concerned. As we all should be.