Mind and Iron: When media companies come for AI

The NY Times lawsuit is likely just the start. And meet the Harvard undergrad who is practicing a kind of human AI for the war in Gaza.

Hi and welcome back from a (much-needed) holiday break. Hope your time away, if you were lucky enough to get some, was sweet.

I'm Steve Zeitchik, veteran of The Washington Post and Los Angeles Times and head ski instructor on this snowy mountain. Every Thursday we aim to bring you all the tech and future news that a human should know, emphasis on the human.

Please sign up here if you’ve gotten this as a forward.

And please consider a pledge of a few dollars here. It will get you all the Mind and Iron you could ever want and support our important mission besides.

We at M&I headquarters were happy to take a minute and skip last Thursday’s issue. And wouldn't you know it: on Wednesday massive news went down. The New York Times sued OpenAI and Microsoft for copyright infringement, seeking billions in damages. We'll catch you up on all you need to know about this titanic event.

We'll also tell you about a Harvard student who is savvily using tech to increase humanity instead of undermine it — hey, positive stories, we’re feeling the optimism in 2024 (four days in).

Also, what did people 100 years ago think their future would look like? We crank up the wayback machine for some telling/funny projections.

And new year, new apocalypse counter. We wipe the slate clean on all the doom from last year to see how 2024 is doing in its quest to inch us toward a better world — or at least away from a tech-enabled abyss. Yes, it's the return of the Totally Scientific Apocalypse Score, starting blissfully at zero.

First, the future-world quote of the week:

"Even though we're trying to keep our bias out of it, that doesn’t mean keeping empathy or emotional concern for other people out of it. And empathy is not something ChatGPT can really do.”

—Harvard student Shira Hoffer, who has founded a new tech platform about the conflict in the Middle East, on why the humans beat the machines

Let's get to the messy business of building the future.

IronSupplement

Everything you do — and don’t — need to know in future-world this week

The NY Times vs OpenAI; the other Harvard news worth hearing this week; what 2024 looked like a hundred years back

1. A long time ago, a shady oracle wrote this about a lawsuit brought by the Hollywood personality Sarah Silverman:

“AI relies on material that is deeply proprietary, and Silverman & Company's willingness to take a stand is only the beginning. Tech companies need creative entities (as much as they like to pretend they don't), which means this is only the first of what will be many salvos in their power struggle.”

OK, the shady oracle was this newsletter. And the distant time was December 21. On December 27, the New York Times sued OpenAI and Microsoft over their ChatGPT and Copilot apps, respectively, in the explosive next phase of this struggle.

The lawsuit (you can read it here) alleges copyright infringement. The Times says the services used millions of copyrighted NYT articles to train their programs without seeking permission or making payments, and now peddle the NYT-heavy results, essentially offering people the chance to read Times articles without paying for them.

“Times journalists go where the story is, often at great risk and cost, to inform the public about important and pressing issues. They bear witness to conflict and disasters, provide accountability for the use of power, and illuminate truths that would otherwise go unseen,” the complaint said. “[Yet] defendants seek to free-ride on The Times’s massive investment in its journalism by using it to build substitutive products without permission or payment….[and] undermine and damage The Times’s relationship with its readers and deprive The Times of subscription, licensing, advertising, and affiliate revenue.”

The paper said it had been trying to negotiate with the companies for months. As far back as last spring, just a few months into the popularity of ChatGPT, Times representatives reached out to OpenAI and Microsoft seeking an agreement. “These negotiations have not led to a resolution,” the complaint noted tartly.

AI companies argue that the content their models churn out is “transformative” — a legal exemption to infringement, born 30 years ago, that says that if the alleged infringer adds "new expression, meaning, or message" then the portions of the original in the new work could be considered fair use.

The lawsuit, at least, is having none of it. “There is nothing ‘transformative’ about using The Times’s content without payment to create products that substitute for The Times and steal audiences away from it.” The paper alleges damages in the billions.

[The suit is part of a larger culture of skepticism between the two industries. Though once in the throes of courtship, news and tech companies have been drifting apart for years — the latter especially questioning whether the added traction from news links is worth the polarization headaches. (Now they just have other headaches.) The result has been social-media platforms delivering far fewer readers to news outlets — they’re responsible for only half as much of news sites’ overall traffic as they were three years ago.]

The lawsuit says out loud what has been obvious for some time: Feeding someone's copyrighted content into a machine so you can offer what comes out as your own for-profit product may not be very legal, no matter how much computer heebie-jeebie is performed on that content in-between.

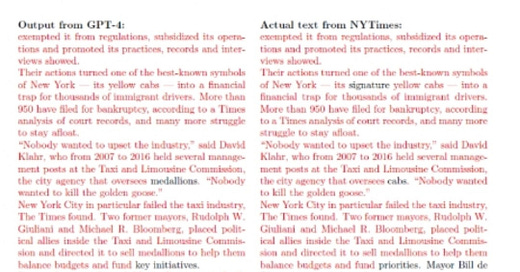

Here’s a sample of a ChatGPT response about a taxi-driver exploitation scandal that the New York Times broke in an epic investigation. (The different words are in gray; everything else is identical.)

OK, so this may be aberrant; ChatGPT doesn’t always respond to questions with unexpurgated blocks of text from the original (though man, that seems like a fixable bug). But what if a third of the text is changed? Or half? At what point does the new response become “transformative”? And even if there are a lot of new words to go along with the old ones, if those new words are just inadequate substitutes, what makes this different from any cheap plagiarism, where the plagiarizer seeks to mask their sins with synonyms?

What the lawsuit unearths is an uncomfortable paradox at the heart of OpenAI and large language models: Producing text that sounds original and unique is what makes ChatGPT more compelling than the generic nothingness that text-based AI produced for years. But to pull that off a model needs to rely more on the original and unique voices in the first place, which lays bare its infringement.

(The complaint also includes some hallucination howlers; to wit, “a GPT model completely fabricated that The New York Times published an article on January 10, 2020, titled ‘Study Finds Possible Link between Orange Juice and Non-Hodgkin’s Lymphoma.’ The Times never published such an article.”)

The suit strikes a blow for anyone who looks at what these models are spitting out and, instead of simple awe, reasonably asks, "didn't someone have to create the knowledge base it's drawing from?"

The Authors Guild and some pretty famous writers said exactly that in September, filing their own version of this suit. And of course there was Silverman in July. But in practical terms the Times case is more consequential. That’s true both for the tech companies (who can skate by without a George R.R. Martin book a lot more easily than they can without the country’s leading newspaper) and for the NY Times. Consumers are unlikely to have AI write a book from their favorite author; the love is too specific. But they could easily use chatbots to displace news outlets.

(Btw chatbots at the moment can’t really offer breaking news; training takes time. This is more of an archive concern.)

We’ll get further into the legal merits in a future issue. But let’s consider an intriguing hypothetical: what happens if the Times (and others that inevitably join the cause) win? What does it do to the future of news? And AI?

How will we transact with the digital world if AI can’t use the country’s biggest news outlets? Because if the NYT is successful in stopping OpenAI’s practices it will drastically change how these models are built. There's a big difference between a large language model that uses the most important media outlets of our time and one that doesn’t.

Already some were preparing the obituaries. “Generative AI is about to become a s**t show,” posted the AI expert Gary Marcus, who in several X posts compared what was happening to OpenAI now with what happened to Napster circa 2000: a massive bubble popped by a judge, soon leading to the company’s decline.

He also argued that it isn’t just ChatGPT and text; these issues plague image-generators too. When Marcus and the Hollywood visual artist Reid Southen had OpenAI’s Dall-E casually produce “Star Wars” content, it came up with stuff eerily similar to the real thing. Here’s what it looked like. (Original on right)

Pretty startling.

So yes, it’s reasonable to think that a judge can lower the boom and cause a Napster-like unraveling.

Of course that's assuming no one here wants to play ball with OpenAI. Ultimately I think media and tech companies will end up where they should have been all along: in content-licensing deals. News outlets, suffering precisely because of the traffic dips caused by the Silicon Valley divorce, need this licensing money. Stands are nice. Survival is better.

So OpenAI may look like Napster now but will end up as Spotify. And we’ll end up with outside entities getting paid for what goes into the AI blender.

Indeed, just a few days before the suit, the NY Times reported that Apple was talking to publishers about paying them as much as $50 million to allow their content to be fed into AI. (Good news, but will they share that windfall with reporters, who will be powering the service that powers the world?)

So a successful NY Times lawsuit will probably not, in the end, stop AI’s takeover as our content gatekeeper — it will just shift us to a payout system.

We have a long way to go. The suits need to progress (the Silverman case has seen a number of its claims thrown out by a judge); they have to be won; and it all has to lead to big enough deals for the people creating the content.

And that last point is crucial. Because if the pivot from original news sources to AI chatbots actually turns out like music streaming there really isn’t that much to celebrate. As anyone who’s followed the music biz knows, the shift from album sales to streaming suddenly meant there was a lot less money to go around for creators. Monthly subscribers (in part thanks to Napster) weren’t willing to pay nearly as much per song as they did when they spent $15 on an album. Plus there was now another giant intermediary to share revenue with. For those who produce the journalism/art, this could be a pennies-on-the-dollar situation.

But for now there’s at least this piece of good news: Big media brands are recognizing that all the words and images that creative people produce can’t just be snatched up by Silicon Valley and put in the AI thresher for free. And these entities are willing to go to court to back up that belief.

2. The war in Gaza has shown the worst of what technology could be — disinformation, polarization and depression-inducing doomscrolling have been just some of its lovely contributions.

But a student at Harvard is ingeniously showing how technology can actually be additive to a conflict that has taken or destroyed the lives of so many people. I don't think I’ve come across too many projects that better embody the goals of those of us who seek to deploy tech in service of our humanity.

Shira Hoffer, a junior at the university, recently came up with the “Hotline For Israel/Palestine.” The service is a text-based number — like, you can message it now at 617-313-2125 — where volunteers receive questions and then dispatch them to the person best equipped to answer. That volunteer then furnishes information and relevant sources without offering any opinion (and even keeping the analysis to a minimum).

The goal is to educate in a realm where passions can be high but knowledge scant – a kind of exercise in radical neutrality that the world urgently needs more of.

Since launching the hotline just two months ago, Hoffer and a team of 18 volunteers from North America to the Middle East (mostly non-students between the ages of 30 and 50) have already responded to some 400 threads, on everything from the water situation in Gaza to the definitions of terrorist and apartheid.

The hotline also offers op-eds that show a range of perspectives, from the Jerusalem Post to Al Jazeera to many think-tank papers in-between. Hoffer’s goal is not just to get people to know something they never learned but to absorb a point-of-view they’ve never heard.

A social studies and religion major who also works as a mediator at a local courthouse, Hoffer, 22, came up with the idea for the hotline in mid-October. She had just sent a statement from then-president Claudine Gay on the situation to her dorm’s listserv, adding her contact info for anyone who wanted to talk about what was happening. Hoffer got a strong response, and soon she had devised the hotline, essentially universalizing the opportunity.

“It's not super-popular these days, especially among college students, to be interested in hearing the opposing view, especially if you think that opposing view is morally wrong or in some ways violent. And violence now can include something you just disagree with,” Hoffer said when I Zoomed with her this week. “Right now it’s cool to say ‘let’s de-platform this person because they’re doing violence to my community.’ And I don’t mean to be disrespectful to the people who feel this way but I don’t think that’s the way to learn.”

The goal with the hotline is not really to create a community or dialogue. It’s more the step before all that — to educate people about information or perspectives they might lack in our balkanized world so that they can then go and be a better participant in that dialogue.

Not lost on Hoffer is that she’s trying to do this using technology, which in many ways is responsible for the balkanization in the first place.

“I don’t think this is how the Internet is usually used,” laughed Hoffer, who grew up in Hanover, NH. “We usually just go to the source we agree with.” After the election of Donald Trump in 2016 her father set his homepage so that both CNN and Fox News pop up simultaneously. There was plenty of understand-both-sides modeled in the Hoffer home.

Hoffer’s project can’t but recall another Harvard student who two decades ago used the Internet to bring people together (and eventually split them apart). Though given where Facebook went, perhaps the better aspiration here is Wikipedia, another nonprofit relying on human volunteers, and one of the few online information sources trusted by people of all stripes.

I tried out the service. It was weirdly intimate, like texting a very Middle East-knowledgeable friend, if at times a little stiff.

Here’s what I asked it on Tuesday afternoon:

“Hi. I saw the news today that Israel killed a senior member of Hamas in Beirut and am struggling to figure out what it means. Is this likely to dial down hostilities between the two sides (based on past targeted killings) or make things worse? And what effect could this have on larger stability in the region given the leader's ties to Hezbollah? Thanks very much for the service you provide.” (Reporters and their multi-part questions, sheesh.)

By Wednesday morning I got this back.

“Hi. Your question refers to the policy of targeted killings undertaken by the IDF. Of course, this is not a new policy. This analysis from The Guardian was published about 2 weeks ago.

“It certainly seems likely that tensions are rising. At a memorial service for an Iranian general killed by a US drone strike 3 years ago, 2 bombs went off on Wednesday, killing over 100 people. This report is from France24. Stay tuned, and keep in touch.”

I pressed with a follow-up seeking to clarify the Gaza situation specifically and got something a little more definitive.

“It's an interesting question. The strategy is not designed to lessen the crisis. The IDF is moving some armored and infantry divisions out of Gaza. Maybe there will be some easing of the civilian casualties. But further war in Lebanon seems likely.” And then they cited a Middle East News Agency story.

What was striking to me is how straightforwardly useful it all was. I might well have come across these stories surfing around X or Threads. But the way their algorithms work, those platforms are more likely to show me mainly outlets/posters that I agree with or have engaged with. Free of all knowledge about me or what it thought I wanted to hear (or get mad about), this human-centric platform simply relayed the information it thought would most enrich me.

Sure, it uses technology — an elaborate customer-service system allows text messages on the back-end to flow through to the right volunteer. But when it comes to tech, Hoffer’s creation may also be notable for what it doesn’t do: rely on machines to decide what’s in our interest.

Or put another way, by injecting a human element into a tech platform, the hotline returned the Internet to what it once was and what many of us wish it could be again: a place we go not just to get angry or find solidarity but to learn. Optimizing not for engagement but for education.

Still, I did wonder if something like ChatGPT might have a role here. After all, the people responding to the hotline were still human, susceptible to their own baggage. Wouldn’t this be the rare case where taking our humanity out of the equation in favor of a more dispassionate AI, flawed as it might be, would be a good idea?

I asked Hoffer this. She had a potent answer. “Even though we're trying to keep our bias out of it, that doesn’t mean keeping empathy or emotional concern for other people out of it. And empathy is not something ChatGPT can really do.” She cited instances where a questioner marched in with a swaggering point-of-view but still showed glints of a willingness to learn, and a volunteer had to figure out how to phrase the response to reach them.

“I don’t know that ChatGPT would have known how to handle that,” she said.

The lesson is hard to deny: no matter how much we use tech to foster conversation (AI here could guide the volunteers, for instance), put humans at the center and you end up with a lot more sympathy and understanding. Leave them out and we continue to free-fall.

Notably, while Hoffer said she’s gotten no pushback from Harvard students or faculty, she hasn’t received a ton of overt support either. She can’t say for certain, but she suspects most of the questions come from off-campus.

“I think a lot of Harvard students feel like they have moral clarity on a lot of different issues,” she said. “And if you feel you have moral clarity there’s not a great reason to learn. You’re not going to ask the hotline questions because you don’t actually think you have any questions.”

This hasn’t deterred her from pushing ahead. She’s in the process of turning her enterprise into a formal nonprofit, with the goal of extending it to other topic areas (she incorporated as the “Institute for Multipartisan Education”). The hotline seems scalable to so many areas — elections, legislation, social debates, legal rulings — where people are encouraged to take a position without really knowing what they’re supporting.

She’s also developed a newsletter and begun working with a number of high schools to help implement this system and ethos among their students.

If her mission takes off, the next wave of kids coming to Harvard may have a lot more curiosity to go along with all that clarity.

3. We spend a lot of time in the tech-future space trying to figure out where society is headed over the next year, or ten years, or 100 years.

Certainly these early January days are all about people making forecasts, and this space is not immune to the scourge. (We’ll have a few of them from top futurists and thought leaders in the coming weeks.)

But these projections are really best made when we understand where we’ve gone right and wrong making them before. Only history can hone our futurism.

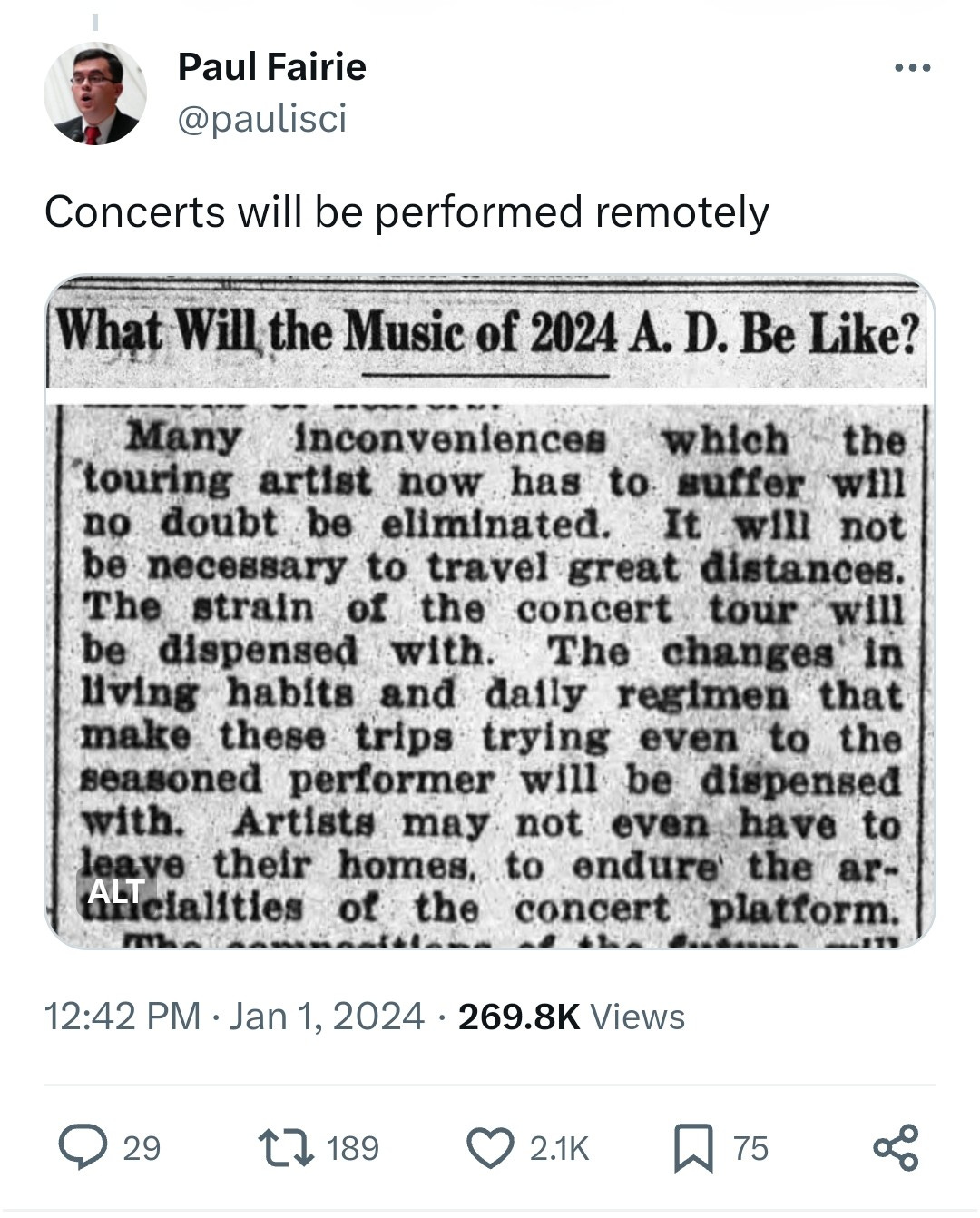

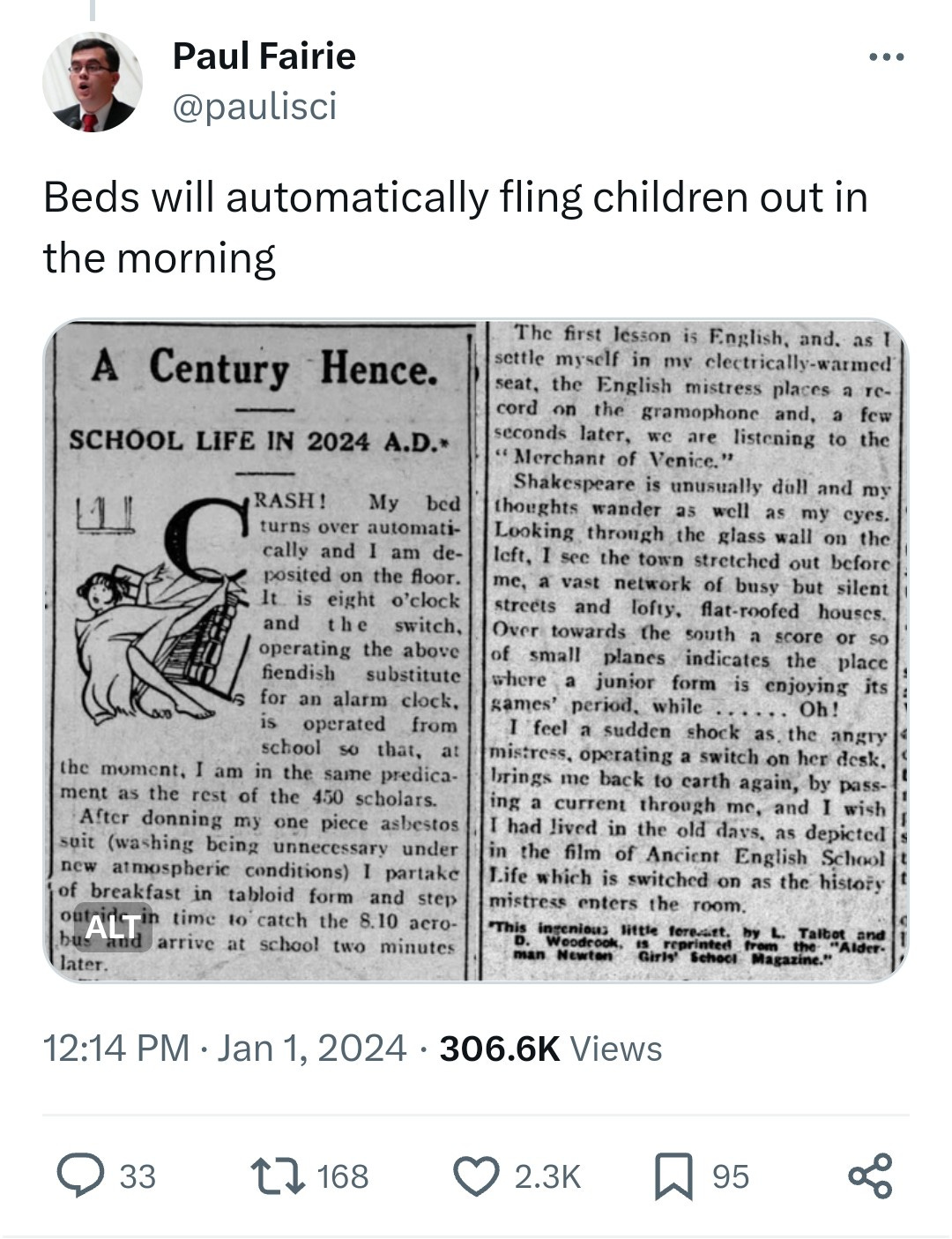

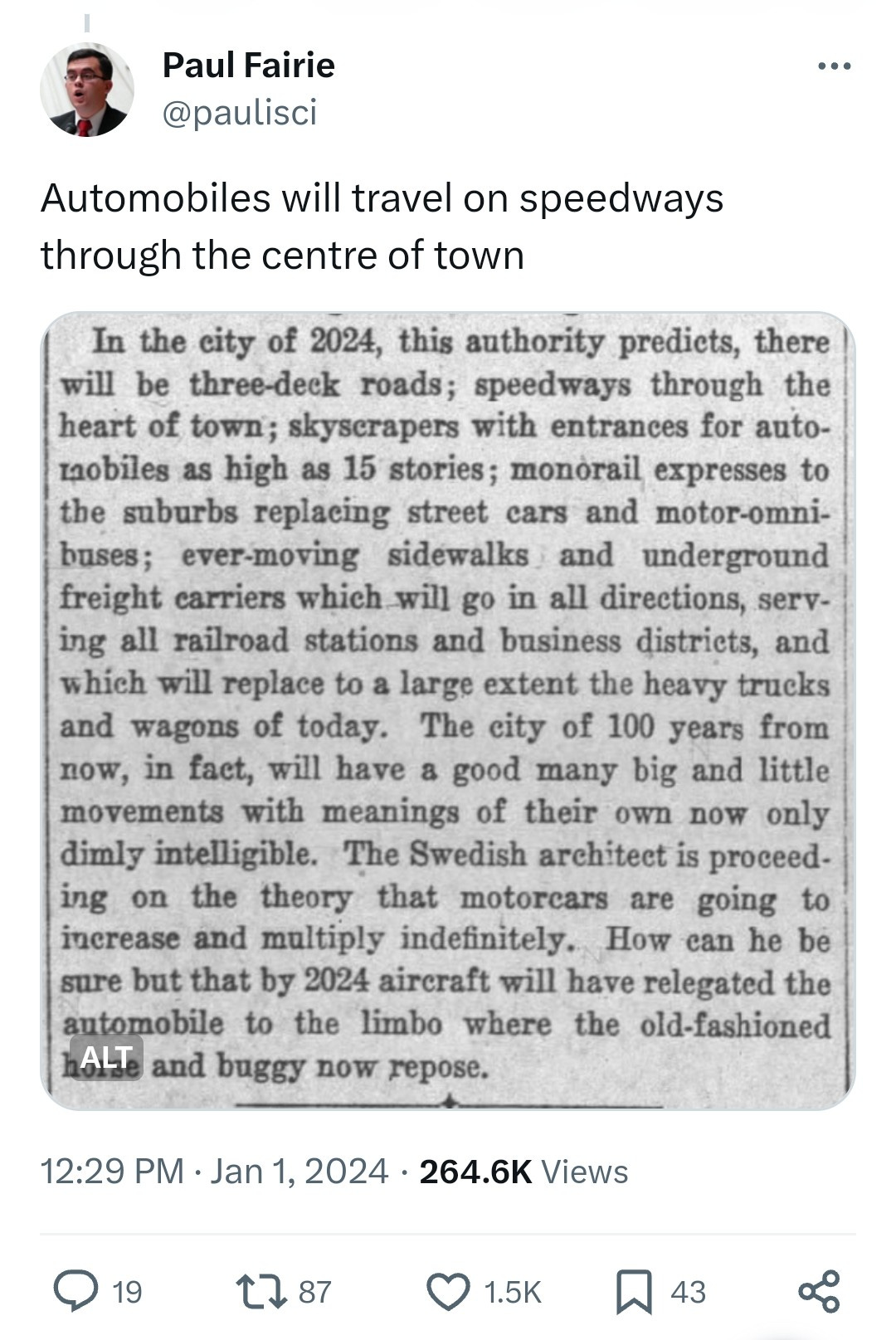

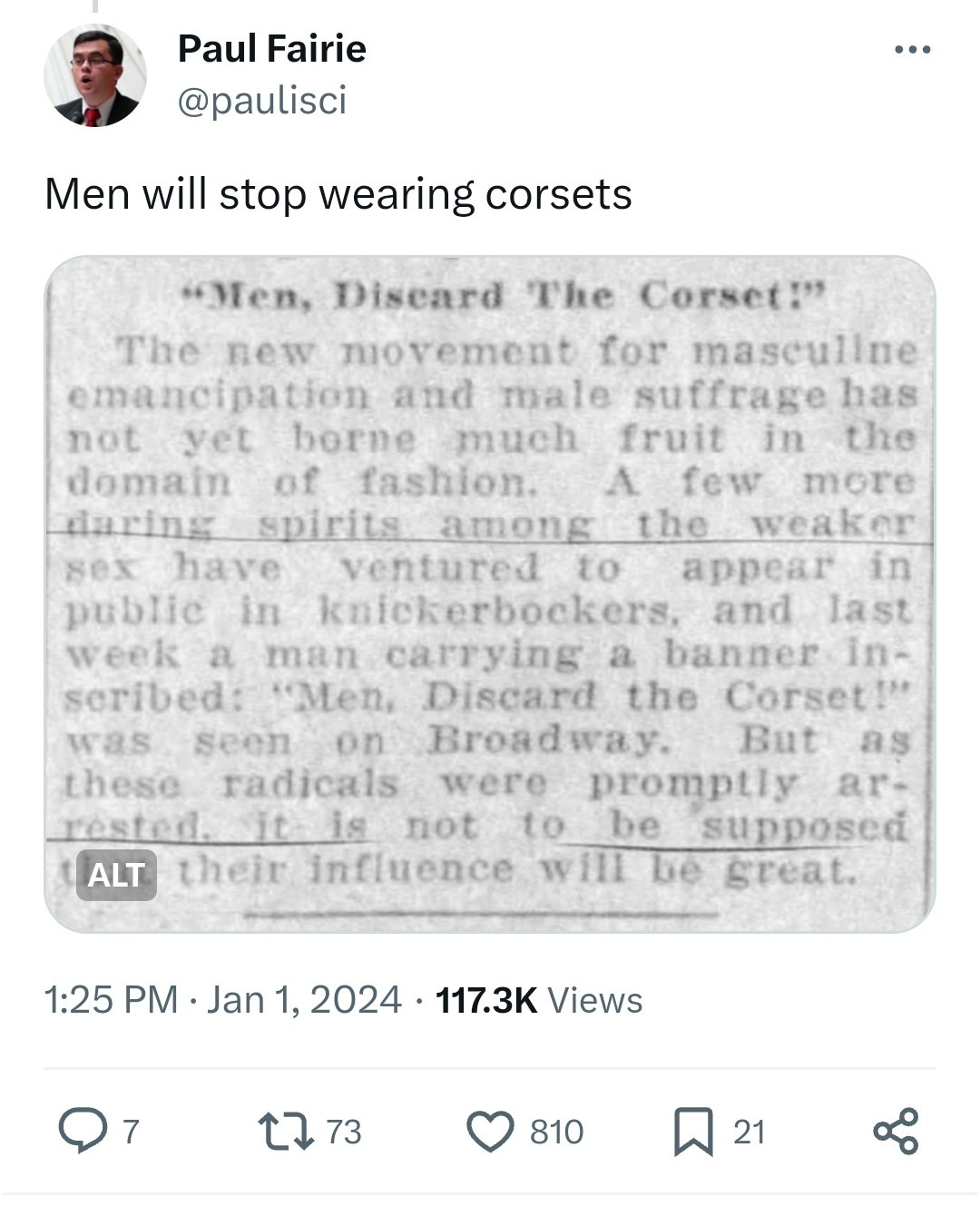

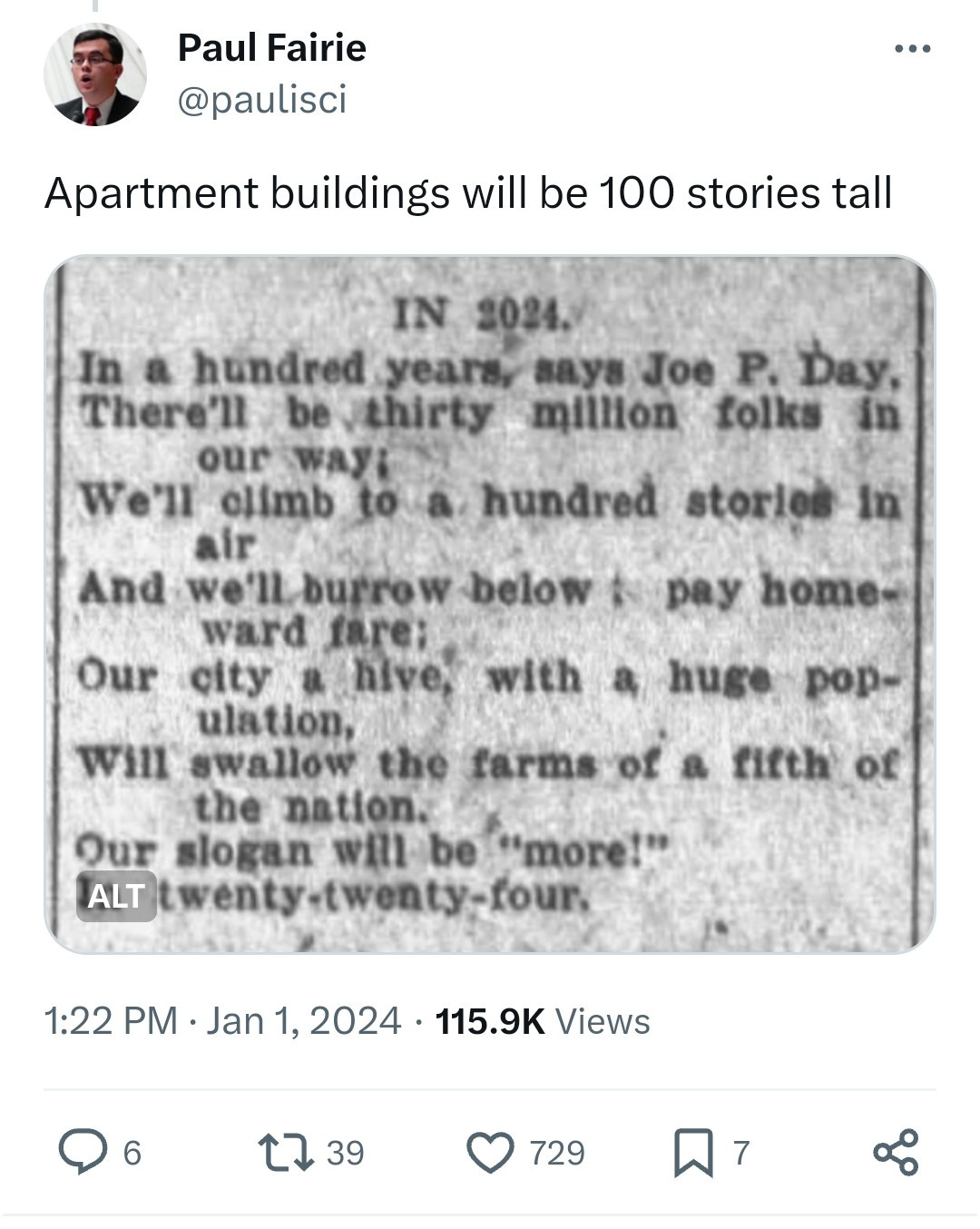

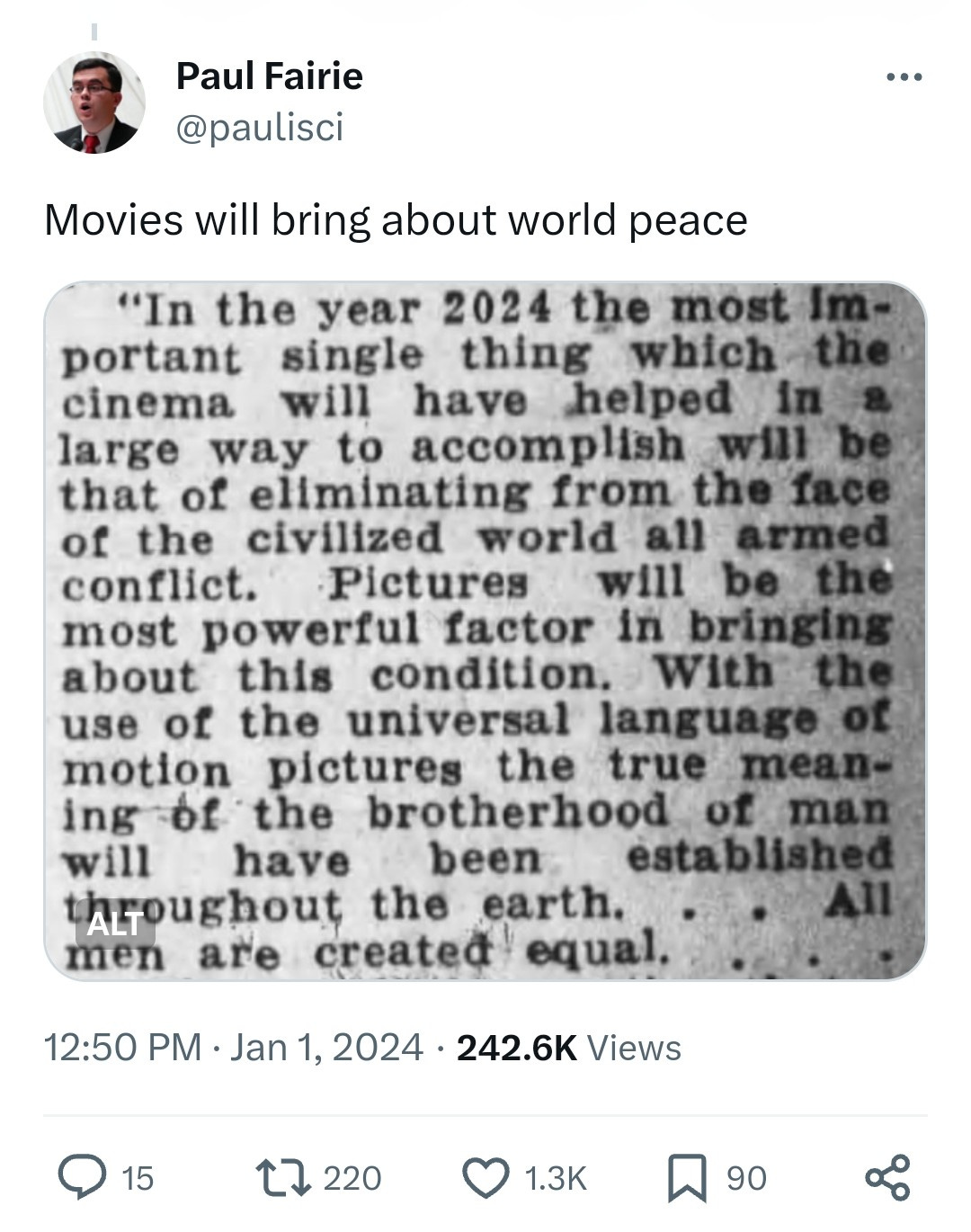

With that in mind, check out these images. They come from a fascinating X thread about predictions made in 1924 on where humanity would be in 2024. It was posted by a University of Calgary professor named Paul Fairie, who specializes in looking back to see how people in the past understood the future.

Here’s some of what he found was being said about 2024 back in the year 1924 (apparently they were pretty obsessed with us).

Actually their forecasts were…not awful. I can’t imagine we’d do much better for 2124.

Pretty much there, except for that last part

VR!

The pandemic got us there four years early

Kinda want

So, LA

Speak for yourself

Well ahead of schedule on this one

So they predicted Barbie

The Mind and Iron Totally Scientific Apocalypse Score

Every week we bring you the TSAS, the TOTALLY SCIENTIFIC APOCALYPSE SCORE (tm). It’s a barometer of the biggest future-world news of the week, from a sink-to-our-doom -5 or -6 to a life-is-great +5 or +6 the other way. Last year ended with a score of -21.5 (gulp). But it’s a new year, so we’re starting fresh: a big, welcoming zero to kick off 2024. Let’s hope it gets into (and stays in) plus territory for a long while to come.

THE NEW YORK TIMES FIGHTS BACK AGAINST AI SWIPING THE WORK OF ITS JOURNALISTS: Aw yeah. +4

A HARVARD STUDENT CREATES A HUMAN-CENTRIC HOTLINE TO BRING MORE KNOWLEDGE TO THE MIDDLE EAST DEBATE: More of this, please. +3.5