Mind and Iron: A Pro Gambler Is Taking on an AI for March Madness

A "Matrix"-like showdown. Also, the artificial flavors of synthetic data

Hi and welcome back to another sparkly episode of Mind and Iron. I'm Steven Zeitchik, veteran of The Washington Post and Los Angeles Times and lead flavor-taster at this journalistic Van Leeuwen.

Every Thursday we hit your inbox with the latest in tech and future news, telling you why it matters and why you should be enthralled/worried/optimistic/contemplating a move to Tasmania. Please consider supporting our independent mission.

We've got two scoops of newsy ice cream this week. One more technical — but it matters! really! — and one more fun. (But it matters too! Really!)

The latter concerns the national obsession that is March Madness, which we totally, absolutely, undeniably do not spend too many hours on this time of year. The NCAA men's and women's basketball tournaments are an endeavor at which AI has been trying to get smarter than humans for a while now — ah, that eternal ancient-Greek quest for a better bracket — and this year it may have a new trick up its sleeve.

Also, you probably haven't heard of synthetic data. But you'll soon be affected by synthetic data. What is this strange concoction and why are tech companies falling over themselves to scoop it up?

Housekeeping note: we’re away next week, and back at you with all the doozies the week following.

First, the future-world quote of the week:

“We’re not a crystal ball. But data, we feel, will beat humans.”

— Alan Levy, an entrepreneur who has designed a sports-prediction model he says can defeat a pro sports gambler

Let's get to the messy business of building the future.

IronSupplement

Everything you do — and don’t — need to know in future-world this week

Machine KenPom; Handsy with Gretel

1. THE IDEA OF AI SOLVING OUR DEEPEST CLIMATE CHALLENGES and thorniest geopolitical problems has been waved about pretty tantalizingly lately. So surely it can correctly throw a dart at a few college basketball games?

You'd think. But sports results present a knotty challenge for AI, because events like March Madness combine reams of data (how DO the double-digit seeds fare historically when their three-point shooting percentage is ranked at least as high as their defensive efficiency?) with the utter caprice of human beings stumbling around on a squeaky floor (how WILL Cooper Flagg's ankle hold up?). The latter has variables even those closest to the game can't know, as there has never been another Cooper Flagg, and he only has two ankles.

Confronted with this challenge, machine intelligence has so far offered us...something. Not everything. But something. One case in point comes from our own research. Last year at this time, you may recall, we took a bracket generated by a relatively sophisticated March Madness AI model and tallied up its score among the 31 entrants to the pool we run every year. The result was solid if not vanquishing: the AI finished tied for sixth place.

As our piece had it at the time:

"My goal with this experiment was to see whether a set of outcomes overflowing with variables — something humans struggle to predict, relying too much on intuition and heuristics — could be forecast better by a machine intelligence. By this standard, the AI both performed impressively and utterly flopped. The impressive part came with the fact that it did its job very respectably. If you were submitting a sheet and were hoping for a decent score, the AI would have helped, putting you in the standings’ top 25 percent. (Assuming no one else was using AI.)

"But if you wanted it to do something humans couldn't, see what we couldn't see, you were out of luck. My pool is mostly ordinary duffers; they're not basketball savants. And yet six people could predict the tournament as well or better than an AI could."

Now, ideally models get better, while college basketball stays the same. So you'd expect that this year — with OpenAI's o1 and Google's Gemini 2.0 and all the other reasoning advancements of the past 12 months — the AI might truly challenge for the top spot.

That's the experiment undertaken by an entrepreneur named Alan Levy, who has an AI-data company called 4C Predictions (full disclosure: I hadn't heard of it before). In what is a pretty shameless stunt, albeit also an extremely fun one, Levy is pitting his own model against Sean Perry, a gambler-influencer type with a decent track record in sports wagering.

Levy is betting a million dollars that his AI can beat Perry. And over the next 2 1/2 weeks, we'll find out if he's right. (You can follow the whole shebang here.)

Such contests certainly merit headlines; those who've been charting AI's progress might recall Watson successfully challenging "Jeopardy" champions some 15 years ago. These human-constructed games (predicting a basketball tournament, solving clever clues in the form of a question) are often how we measure intelligence. So these showdowns tell us how much we're losing ground to the machine (or, how solid our technological progress has been lately, if you're feeling charitable).

If we can devise a machine that can figure out future outcomes better than we can, or at least can help us so we figure it out better together, well then God bless this new age of intelligence (or God save us from this new age of intelligence, if you're feeling uncharitable). And so we arrive at the attempted decipherment of the enigmas and mysteries of our annual hoops tournament. Not since the Harlem Globetrotters took on the robots in "Gilligan's Island" has man-versus-machine on a basketball court seemed so consequential.

But a couple points are worth making before the bold takeaways that AN AI IS BETTER THAN ALL HUMAN GAMBLERS or, conversely, NO MACHINE CAN MATCH HUMAN PREDICTIONS start coming at us. A couple points, really, on why we should be taking this experiment with a grain of salt. And how we should actually be thinking about AI when it comes to advising us on unfolding events.

First, it bears noting that there is a lot of entropy in Division I college basketball games: all kinds of matchup factors and injury factors and scheduling factors and coaching unknowns and other squishy variables that even the most statistically well-fed model can't account for. So the chances to go astray are many, for a human or a machine. These x-factors make any one pool player, human or machine, not entirely reliable, a bad "dictator" in game-theory terminology. This isn't chess or Go, where your strategy directly affects the outcome. This is March Madness, where your strategy mainly affects your self-delusion.

Second, there are a lot of possibilities in a Division I college basketball tournament. Just, mathematically, on the sheet. You'd think there would be, what, a few thousand possible brackets? Maybe ten thousand? In fact there are 9,223,372,036,854,775,808 possible brackets. That puts any pool player, man or machine, at a disadvantage, since they only get to fill out one. A further reason we may not want to extrapolate too much from these results.
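For anyone wondering where that enormous number comes from, here is a quick sanity check in Python. It assumes a plain 64-team single-elimination field and ignores the First Four play-in games (our simplification, not an official count): 63 games, two possible winners each, so 2 to the 63rd power.

```python
# A 64-team single-elimination bracket: every game eliminates one team,
# so crowning a champion takes 64 - 1 = 63 games.
# (Assumption: we ignore the First Four play-in games.)
TEAMS = 64
games = TEAMS - 1

# Each game has two possible winners, so the possibilities multiply to 2**63.
possible_brackets = 2 ** games

print(f"{possible_brackets:,}")  # prints 9,223,372,036,854,775,808
```

Put another way, every extra round doubles the count again and again, which is how a field of just 64 teams balloons past nine quintillion sheets.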

But the biggest reason this is a flimsy experiment (and the point that gets to a bigger misconception around AI usage) is not just that there's a limited set of outcomes and thus reduced mathematical odds. It's that AI, specifically and by technical design, works best when there is an almost endless set of outcomes. When there's not one correct sheet but many hypothetically correct sheets in all the parallel March Madness universes.

We are asking AI here to take one shot at a distant target. But that's an artificial experiment, because AI predictions and decisionmaking are by their very engineering about taking as many shots as possible.

But wait, isn't one shot at a target exactly what AI is doing when it's, say, making a medical diagnosis, predicting the likely outcomes for one patient? Well, yes. And no. The success of AI won't really be measured that way. The success of AI in diagnostic medicine, or decisionmaking guidance, or any of the many realms where we'll use it to take over the thinking for us, will be judged on very different terms.

In basketball-land there is one way March Madness can play out. But in, say, pulmonary medicine, there are a million asthma patients in a given U.S. state and thus a million ways their situations can play out. AI (if the models work) is simply good at getting as many of those million outcomes right as possible. And, if they really work, getting more of them than a human would.

That is, the test of whether an AI diagnostic tool works is this: If you put the best doctors in a room and the best AI model in a room, which will get the diagnosis right for the greatest percentage of that group of a million patients? The AI may not give the best diagnosis for any single asthma patient. But we're not playing that game. We're playing a game of whether the AI is going to get more of that million right than humans do.

Probability theorists would call this a manifestation of the Law of Large Numbers: The more cases you run a test on, the closer the average result lands to the true underlying odds. A good AI trying to figure out the best diagnosis for a million asthma patients is pretty much this idea embodied; it will, over the course of its many predictions, get as good a result as we can. And so we should use the AI if it hits a higher average than humans.

But a test in which an AI tries to predict the outcome of a single March Madness tournament is doing the opposite of all this. It is trying to predict the outcome for one asthma patient. And the accuracy of that single prediction is not very telling at all.
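To make the one-patient-versus-a-million point concrete, here is a small simulation sketch. Everything in it is an assumption for illustration: the accuracy rates (62 percent for the AI, 58 percent for the human) are invented, and each call is treated as an independent coin flip, which real diagnoses and real basketball games are not.

```python
import random

# Hypothetical per-case accuracy rates, invented purely for illustration.
AI_ACCURACY = 0.62
HUMAN_ACCURACY = 0.58

def share_correct(accuracy, n_cases, rng):
    """Fraction of n_cases independent calls that come out right."""
    hits = sum(rng.random() < accuracy for _ in range(n_cases))
    return hits / n_cases

def ai_win_rate(n_cases, n_contests, rng):
    """How often the AI strictly outscores the human across repeated contests."""
    wins = 0
    for _ in range(n_contests):
        ai = share_correct(AI_ACCURACY, n_cases, rng)
        human = share_correct(HUMAN_ACCURACY, n_cases, rng)
        wins += ai > human
    return wins / n_contests

rng = random.Random(2025)  # fixed seed so reruns match

# A one-off contest, like a single bracket or a single patient.
single = ai_win_rate(n_cases=1, n_contests=20_000, rng=rng)

# A big contest, like a large patient population (scaled down to run quickly).
large = ai_win_rate(n_cases=10_000, n_contests=100, rng=rng)

print(f"AI outscores the human on a single case:  {single:.0%}")
print(f"AI outscores the human over 10,000 cases: {large:.0%}")
```

Under these made-up numbers, the better predictor comes out ahead on a single case only about a quarter of the time (0.62 x 0.42, with most of the rest being ties), yet it wins nearly every contest once thousands of cases are in play. The edge is real, but it only shows up in bulk.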

All of this leads to the idea that the Perry Test isn't very useful here or, really, for any one-off event (a presidential election comes to mind). I mean you can use it, but it will be like using your smart college-buddy Rob. Maybe he's right; maybe he's wrong like the rest of us. You simply can't count on the accuracy of a prediction for any single outcome.

That said, the Perry Test offers the spectacle of two competitors with radically different backgrounds going up against each other, and that's a very entertaining prospect. The Harlem Globetrotters vs. the Robots, after all, was really fun to watch.

2. ONE OF THE TRULY GREAT THINGS ABOUT AI is how it takes all this real-world data, the English poet Matthew Arnold’s “best of what has been thought and said” (and some of the worst), and processes it to help us see and explain what could not otherwise be seen and explained.

GIGO — Garbage in, Garbage Out, the axiom has it. And the reverse is true too. The more good data we can get, the better an AI will be trained and thus understand our world and guide us through it.

So why are tech companies suddenly scrambling to buy all this fake data?

The point was underscored this week by news that Nvidia will buy Gretel, a so-called “synthetic data” company, for as much as $320 million. Gretel is a small firm — only about 80 employees. But it offers access to a whole bunch of synthetic data. And thus attracted the attention and checkbook of one of the world’s hottest tech companies.

Synthetic data is in many senses like the real thing — information about everything from human spending behaviors to voting preferences to medical history to driving patterns that can be used to train an AI. With one catch: none of it ever happened. Instead, the machine generates all these behaviors as if they might have happened, then that in turn is fed into the model. A machine infers from human behavior, so that another machine can then infer from its inferences.

Yes, synthetic data is the nesting doll of computer programming.
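For the curious, the basic move can be sketched in a few lines of Python. This is a deliberately crude toy and an assumption on our part, not how Gretel or any commercial generator actually works: it learns an average and a spread for each column of some made-up "real" records, then invents new records from those statistics.

```python
import random
import statistics

# Made-up "real" records: (age, monthly_spend). Purely illustrative.
real_records = [
    (34, 410.0), (51, 620.0), (27, 300.0),
    (45, 555.0), (62, 700.0), (38, 480.0),
]

def fit_columns(records):
    """Learn a mean and standard deviation for each column."""
    columns = list(zip(*records))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def generate(params, n, rng):
    """Draw n synthetic rows, sampling each column independently from a normal curve."""
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]

rng = random.Random(7)  # fixed seed so reruns match
params = fit_columns(real_records)
synthetic = generate(params, 1000, rng)

# None of these rows describe a real person, but the averages resemble the originals.
print(synthetic[:3])
```

Note the built-in weakness: because each column is sampled on its own, the toy forgets that older customers in the original records spent more. Real generators try much harder to keep relationships like that, but the underlying worry is the same one raised further down, namely that the copy only knows what the model managed to learn.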

The reason it’s become so useful is that banks, hospitals and other institutions run into all kinds of privacy issues with real-world data; there are limits to what you can use if you want to avoid legal violations. (Ditto with media applications, considering that the whole question of copyrighted material is in litigation limbo.) Synthetic data offers no such issues, since none of what’s being ingested ever actually happened to anyone. A company called Syntegra helped the National Institutes of Health generate data representing nearly three million people during the height of Covid without using their actual records. Many of the same health benefits, none of the surveillance drawbacks.

The other reason synthetic data has become so useful is that there is, quite simply, a shortage of real-world data. The “data-scarcity problem,” in industry lingo. You wouldn’t think this is the case, given the amount of noise that came at you just in your social media feed last Tuesday. But it’s true: there’s simply not enough data left to train AI models. Much of what’s good has already been used; much of what’s still out there isn’t good. So if these systems are going to improve their results, one way to do it is with an influx of massive amounts of new data. Enter synthetic data, the computing equivalent of lab-grown insulin.

And enter Nvidia’s Gretel purchase, which follows a market that has exploded with at least eight notable startups over the past few years. Synthetic data is very real business.

But can it really work this way? Can a machine actually generate the data that another machine needs to then generate results? That’s the big open question. And until we start training models on synthetic data in earnest and deploying them in the world we won’t know the answer.

Certainly there would seem to be all sorts of potential hazards — X-factors in which a computer guessing at what people would do isn’t the same as what they actually did. At a SXSW panel earlier this month, a Texas State University professor noted one easy, local example: the bats that regularly emerge from under the Congress Avenue Bridge. Rely only on synthetic data, you might miss outliers like that. And would you want to get into a self-driving car that missed the outliers?

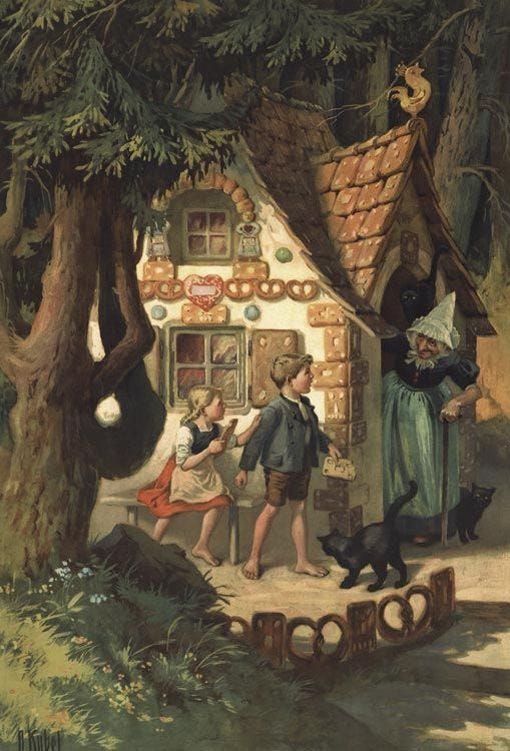

It’s possible that synthetic data of the kind Gretel peddles really does offer a win-win, avoiding privacy/copyright sensitivities while simultaneously making models better. But it’s also possible it doesn’t, offering a promise of more robust models with what is really flawed, inferior data. Sometimes the breadcrumbs just lead to nowhere.

The Mind and Iron Totally Scientific Apocalypse Score

Every week we bring you the TSAS — the TOTALLY SCIENTIFIC APOCALYPSE SCORE (tm). It’s a barometer of the biggest future-world news of the week, from a sink-to-our-doom -5 or -6 to a life-is-great +5 or +6 the other way. This year started on kind of a good note. But it’s been pretty rough since. This week does not reverse the trend.

WHAT DOES A MARCH MADNESS AI TEACH US? Less than we think. -1.5

THE SYNTHETIC DATA REVOLUTION IS UPON US: If only we knew what it would bring. -2.5