Mind and Iron: Can AI predict earthquakes?

Also, Joseph Gordon-Levitt tears it up on AI and Trump

Hi and welcome back to another tangy episode of Mind and Iron. I'm Steven Zeitchik, veteran of The Washington Post and Los Angeles Times, senior editor of tech and politics at The Hollywood Reporter and current high-score holder at this newsy video arcade.

Every Thursday we offer you the best (and worst) of what the future is bringing, and the sharpest ways to think about it. Please consider joining our community.

A hellish and hellishly busy week, so we'll get down to it. We've got two juicy items for you today. The first involves a column that Joseph Gordon-Levitt, the Hollywood hyphenate and fellow Substacker (check out his excellent Joe's Journal here), wrote for me at THR in which he laid out in surgically precise detail the issues that modern AI presents from ethical, legal and creative standpoints. It came a day after the Oscar-winning director Daniel Kwan made comments about how our entertainment landscape could be eviscerated if some former antagonists don't bond together. We'll bring you all that gunpowder and lead.

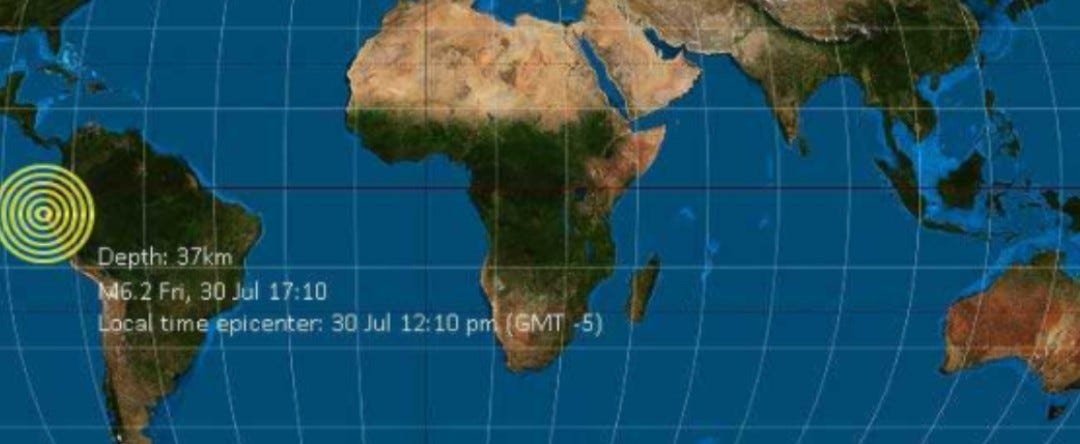

Pivoting in a very different direction, this week also brought the specter of natural disaster with the Russian earthquake and tsunami threat that followed. Climate apocalypse is invariably a part of our future-scape. Can AI tools keep up to warn us? We'll take a dive into the waters of some potentially life-saving — but hardly clear-cut — technological breakthroughs.

First, the future-world quote of the week:

“The decisions we make today really could commit us to a future where any valuable work done by any human being will become fair game for a tech company to hoover up into its AI model and monetize, while that human being gets nothing.”

—Joseph Gordon-Levitt, on what’s at stake in the AI Copyright battle

Let's get to the messy business of building the future.

IronSupplement

Everything you do — and don’t — need to know in future-world this week

Hollywood takes its knives out on AI; How thinking machines could save us from the next earthquake

1. A FEW WEEKS AGO, WE SUGGESTED THE METAPHOR OF A FARMSIDE JUICE STAND to explain the dynamic happening with AI copyright.

I thought it was pretty solid: you're over here selling your juice, someone who hasn't farmed a day in his life comes along, takes your fruit, drops it in a blender and says that because he's also dropped some other fruit into his machine, he doesn't have to pay you a penny.

Joseph Gordon-Levitt may have just one-upped that metaphor, though, and stuck with the agrarian theme to boot. In the THR op-ed, the actor, filmmaker, tech-policy savant and general humanist advocate furnished this example:

"A long time ago, kings owned all the land, while serfs worked that land without owning anything. Back then, if a serf had said, 'Hey, I think this little plot of land where I built my house and farm my crops should belong to me,' he would have been laughed at.

'Oh yeah, how’s that going to work?' the king would have asked. 'Is every one of you going to own your own little plot of land? Will you little people be able to buy and sell land to one another? How are you going to keep track of who owns what? Obviously, none of this is doable.'"

The idea, of course, is that the Middle Ages’ land is today’s data. And while it's easy for tech companies to take the feudal landowner's position that giving that land to the user is simply unthinkable, our modern eyes would never accept that logic for an actual field. So why should we accept it for anyone who comes to till our personal info and creative output? "The decisions we make today really could commit us to a future where any valuable work done by any human being will become fair game for a tech company to hoover up into its AI model and monetize, while that human being gets nothing," Gordon-Levitt wrote.

Gordon-Levitt lays out the charred landscape we'll then be left with if we allow those assets to be swept up by the tech barons, as Donald Trump's recent AI Action Plan seems to do. In addition to everyone who already created something getting ripped off, Gordon-Levitt writes, there would also be no incentive for anyone to create anything new. "Our media landscape and public square will become absolutely devoid of anything but algorithmically regurgitated slop optimized for attention maximization and ad revenue," he concludes.

And he doesn't stop with us effete white-collar creator types. This kind of skill appropriation would happen to (and potentially cause the economic collapse of) all kinds of ecosystems; an automated "plumberbot," for instance, would be trained on the knowledge and abilities of every plumber who came before and would wreak the same destruction as automated image and writing programs.

Gordon-Levitt provides some pathways for hope, notably the bipartisan bill that Democrat Richard Blumenthal and Republican Josh Hawley introduced in the U.S. Senate last week, which would allow Americans to sue AI companies for that kind of ripoff. (Hawley said that “AI companies are robbing the American people blind while leaving artists, writers, and other creators with zero recourse,” a line that would sound right at home in the mouths of the WGA and other artist advocates.)

Gordon-Levitt offered his thoughts just a day after my THR colleague Chris Gardner reported that Daniel Kwan — one half of the directing team of "Everything Everywhere All At Once" — presented his own action plan for what to do about the growing influence of AI. If Gordon-Levitt had laid down the intellectual framework for how to think about what's happening, Kwan had come along to give some grassroots solutions based on that framework.

Kwan said: “For the film industry, that means we have to do something unprecedented, which is we have to bring together the studios, the unions, the Academy, agencies, basically everyone, as a unified front against the tech industry.” He added, “We’re putting a line in the ground against another industry that is an invasive species.”

As Gardner wrote, "Kwan called this moment 'the tip of the spear,' and suggested that if Hollywood can take action, then many other industries like education could follow suit. ‘I feel like this is what we need to be doing right now before it gets too late. Once it gets integrated, once it takes over everything, we will be in the same place we are with social media, which is that it’s so entrenched in our economy and we can’t regulate it without ruining our economy.’”

The viability of Kwan's plan for these disparate Hollywood entities to unite is deeply uncertain; in the entertainment business it's often a struggle just to get an agent and a producer to agree, so that kind of dog-cat kumbaya seems...difficult. And even if they do unite, we should be frank about whether that will be enough against an industry with a lot more financial and lobbying clout, as is the case for many industries that come up against Big Tech.

As for Hawley-Blumenthal, whether such a bill would gain enough support on both sides of the aisle to pass is a very open question given that many Republicans in the current Congress would not want to endorse anything that even remotely strengthened consumer protections, while Big Tech makes plenty of campaign contributions to Democrats too.

So no tactics are foolproof, and all attempts are fraught.

And yet this week still felt like a landmark moment, when two tech-savvy people, Gordon-Levitt (who has been immersed in AI and has spoken about it at the likes of the UN) and Kwan (who knows a lot about AI even though, contrary to perception, he did not use the tech for his Best Picture winner), went very public to describe the dangers. That's important because of the platform they hold and the respect they command.

We may yet see the big-screen age of the digital human and the demise of the flesh-and-blood celebrity. But right now we still have plenty of the latter. And two of the bigger ones just used their voices to urge a whole lot of caution about the world we're rushing into.

2. WATCHING TSUNAMIS RUMBLE FIRST TOWARD HAWAII AND THEN THE WESTERN UNITED STATES THIS WEEK, many of us had competing thoughts.

The first: "It's amazing that we can predict the exact moment these destructive waves will reach land with such eerie accuracy."

The second: "How come we still have zero way of predicting natural disasters like earthquakes in the first place?"

Earthquakes, after all, are complete black swans; we have pretty much no warning when one is coming. Tornado prediction is a little better, but only a little; the "hatched" approach can project a 10% probability of one forming within 25 miles, hardly useful if you’re trying to decide whether to scoop up Toto and make a run for it. Flash floods of the kind that caused the horrible tragedies in Texas a few weeks ago are a total crapshoot, based on a host of unknowables.

Hurricanes are probably the most predictable, but most of us are also familiar with all the variables that can alter a storm’s course or severity at the last minute, spelling the difference between Katrina and a non-event.

The question is: can we do better? The ability to predict weather patterns was improving even before AI; meteorologists can now predict weather five days out as accurately as they could only three days out in the 1980s. And now we have modeling we didn't have even five years ago; we have giant data sets no human could previously have compiled; and we have machine intelligence that can potentially see patterns where we just saw clumps of dots. Could we have known the magnitude-8.8 earthquake was going to hit the Kamchatka Peninsula this week, and could we equally have known that, unlike a similarly scaled earthquake 70 years ago, it wasn't going to cause damage in Hawaii?

Certainly a lot of high-octane people think we could. The movement for this new prediction industry is gathering from multiple directions. The UN in November launched an initiative to study how AI can be deployed to predict climate change. A Chinese study is applying brain-scan AI — the same thing radiologists are using to spot tumors the human eye might miss — to weather patterns, with at least some early success. Money is coming into plucky companies like the earthquake-predictor SeismicAI, while a pedigreed startup named WindBorne is aiming to make long-term weather forecasts.

Local meteorologists are applying more AI in their forecasts instead of relying on traditional and expensive physics-based models, particularly when it comes to homing in on hurricane landfalls and tornado eyes. Until now that has been a matter of broad geographic estimation; the idea here is that AI can make it pinpoint. That’s what happened with Hurricane Milton last year, as Florida scientists deployed an AI model that didn’t need reams of real-time data that can lag. Instead they used an AI trained on the patterns of past storms to know quickly and exactly where it would hit.

This exploratory stuff is literally happening as we speak: a study from University of Western Ontario researchers published earlier this month found 86,000 (!) earthquakes under Yellowstone since 2008, about ten times more than previously thought and a phenomenon that at least partly explains why half the clothes from your kid's teen-tour suitcase went missing.

And in the alchemy department (or maybe it's the polio vaccine?), UT Austin researchers earlier this year revealed findings that by gathering a whole bunch of electromagnetic data on site and then putting it through an AI model that uses an algorithmic technique called the random forest approach, earthquakes could be predicted a full week ahead with 70% accuracy. (They tested it over months in a seismically active region of China.)

What all of this adds up to (the optimists hope) is a world in which the shock events that cause widespread devastation will be a little less shocking, because the model will tell us about them as reliably as it tells us tomorrow afternoon’s baseball-game weather. And then we can plan accordingly. The full application of the quant/machine-learning muscle, in other words, against the enemy of climate disaster, which, as we know, is getting stronger as we speak.

If it seems far-fetched — "no one can predict exactly when an earthquake will strike," we say reasonably — recall we once said the same thing about solar eclipses (and in fact they were once dreaded with a lot of the same fear). Now we book tours to maximize totality. Today's threat is tomorrow's shrug.

Yet that doesn’t mean we’re close. “Everybody talks about the weather, but nobody does anything about it,” the writer Charles Dudley Warner said, and it remains to be seen what exactly will be done here. AI has barely been relied upon to make these calls (we still mostly use the good ol’ physics method), let alone built any track record of success.

And even if it does prove itself, something like induced demand (build another highway lane to ease traffic, and eventually traffic worsens as more people drive) could happen here. Minute knowledge of where the next disaster is coming from might make us less, not more, inclined to do something about climate change: if you can predict the next existential threat so well, maybe you don’t feel as strong an urge to prevent it.

And finally, while a 70% seven-day earthquake accuracy rate is a lot better than the current seven-day accuracy rate (of exactly 0 percent), it's not as simple as saying every percentage-point gain is a valuable one. These predictions will get released in real time, so what about the 30% the model doesn't get right? How badly does a miss doom the people who relied on an all-clear, and what about the chaos caused by everyone who jumped into action unnecessarily on a false alarm? More data is always better in theory, and often full of unintended consequences in practice.
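Part of the problem is that "70% accuracy" can hide two very different numbers: the share of real quakes that got a warning, and the share of warnings that turned out to be real. The sketch below separates the two using a made-up tally of 7 hits, 3 misses and 5 false alarms. Those counts are hypothetical, chosen only to illustrate the arithmetic, and are not the UT Austin study's results.

```python
def forecast_scores(hits: int, misses: int, false_alarms: int) -> tuple[float, float]:
    """Return (share of quakes that were warned about, share of warnings that came true)."""
    caught = hits / (hits + misses)          # a.k.a. probability of detection, or recall
    reliable = hits / (hits + false_alarms)  # a.k.a. precision, i.e. 1 minus the false-alarm ratio
    return caught, reliable


# Hypothetical tally, purely illustrative (not real forecast data)
caught, reliable = forecast_scores(hits=7, misses=3, false_alarms=5)
print(f"{caught:.0%} of quakes caught, {reliable:.0%} of warnings came true")
# 70% of quakes caught, 58% of warnings came true
```

The same "70%" headline can sit alongside a warning system where more than four in ten alarms are false, which is exactly the population that jumps into action for nothing.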

I mean, at what probability would you uproot your life to avoid a climate disaster? Somewhere between 1 percent (a risk that pretty much exists all the time) and 99 percent (when we all would). But where's the tipping point?
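Weather forecasters have a classic way to frame that tipping point, the cost-loss model: if protecting yourself costs C and getting caught unprotected costs L, acting pays off once the forecast probability p exceeds C/L. A minimal sketch, assuming that acting avoids the whole loss and using invented dollar figures purely for illustration:

```python
def tipping_point(cost_of_acting: float, loss_if_hit: float) -> float:
    """Probability above which acting is worth it under the cost-loss model (C / L)."""
    if loss_if_hit <= 0:
        raise ValueError("loss_if_hit must be positive")
    return cost_of_acting / loss_if_hit


def should_act(p: float, cost_of_acting: float, loss_if_hit: float) -> bool:
    """Act when the expected loss avoided (p * L) exceeds the sure cost of acting (C)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    return p * loss_if_hit > cost_of_acting


# Hypothetical figures, purely illustrative:
# leaving town for a week costs $10,000; riding out a disaster unprotected costs $200,000.
C, L = 10_000, 200_000
print(tipping_point(C, L))     # 0.05
print(should_act(0.30, C, L))  # True: a 30% warning clears the bar
print(should_act(0.01, C, L))  # False: the everyday 1% background risk does not
```

Under those made-up numbers, anything above a 5 percent chance would justify a week away; a household with less to lose, or a costlier move, would land on a very different threshold.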

And of course we can't ignore how the high-level calculations AI requires guzzle massive amounts of energy and contribute to climate disasters in the first place. The pill that cleared your artery is also the one that clogged it.

Still, in a sea of questionable use cases — of slop-ified content that doesn't reward its creators; of reading summaries that risk reducing levels of cognition; of surveillance tools that threaten to turn the mere act of leaving the house into an exercise in low-level nanny-state financial penalties — it's encouraging to know that when it comes to one of the biggest challenges of our era, AI is arriving right on time. As Bing Li, the lead scientist on the Yellowstone study, says, "By understanding patterns of seismicity, like earthquake swarms, we can improve safety measures, better inform the public about potential risks, and even guide geothermal energy development away from danger in areas with promising heat flow."

We can rightly catastrophize about all the ways machine intelligence might cause harm and reduce the human. But we can also take comfort in knowing the same technology might, at the very least, help us avoid catastrophe and give a fighting chance to humanity.

The Mind and Iron Totally Scientific Apocalypse Score

Every week we bring you the TSAS — the TOTALLY SCIENTIFIC APOCALYPSE SCORE (tm). It’s a barometer of the biggest future-world news of the week, from a sink-to-our-doom -5 or -6 to a life-is-great +5 or +6 the other way. This year started on kind of a good note. But it’s been fairly rough since. This week? Looking up!

CELEBS ARE STARTING TO SPEAK OUT ABOUT THE RISKS OF AI COPYRIGHT INFRINGEMENT: +3.0

AI SHOWS PROMISE ON CLIMATE-DISASTER PREDICTION: +4.5